- AutoEncoder

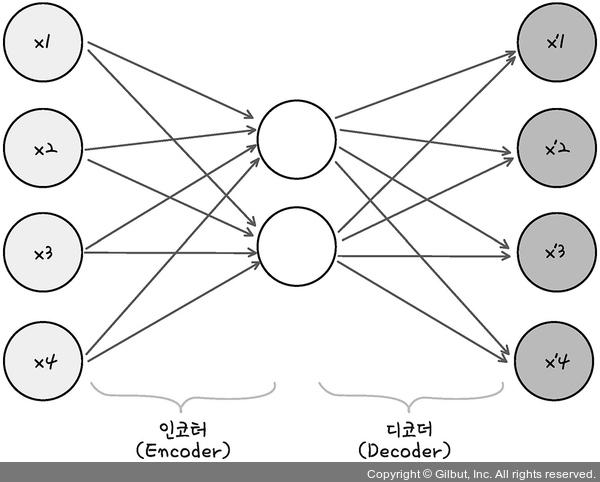

An AutoEncoder consists of two parts, an Encoder and a Decoder, which mirror each other in structure.

| Component | Role |
|---|---|
| Encoder | Takes a variable-length sequence as input and transforms it into a state with a fixed shape. |
| Decoder | Maps the encoded state of a fixed shape to a variable-length sequence. |
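For the fixed-size inputs of a basic AutoEncoder, the mirror structure can be made concrete with a small Python sketch that derives the Decoder's layer sizes by reversing the Encoder's. The sizes (784 inputs, a 128-unit hidden layer, a 3-dimensional code) are hypothetical choices for illustration, and `mirror_decoder_sizes` and `weight_shapes` are made-up helper names, not part of any library.

```python
# Hypothetical layer sizes: a flattened 28x28 image (784 values)
# compressed down to a 3-dimensional latent vector.
ENCODER_SIZES = [784, 128, 3]


def mirror_decoder_sizes(encoder_sizes):
    """Return the Decoder's layer sizes: the Encoder's sizes in reverse order."""
    return list(reversed(encoder_sizes))


def weight_shapes(layer_sizes):
    """Return the (out_features, in_features) shape of each fully connected layer."""
    return [(out, inp) for inp, out in zip(layer_sizes, layer_sizes[1:])]


decoder_sizes = mirror_decoder_sizes(ENCODER_SIZES)
print("encoder:", weight_shapes(ENCODER_SIZES))  # [(128, 784), (3, 128)]
print("decoder:", weight_shapes(decoder_sizes))  # [(128, 3), (784, 128)]
```

Each Decoder weight shape is the transpose of the matching Encoder shape, taken in reverse order, which is what "mirror" means here. Real models do not have to be perfectly symmetric; this is simply a common default.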

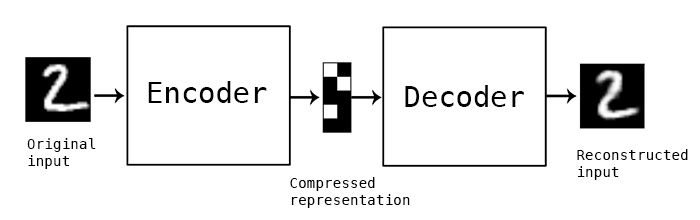

When the model receives an input, it first compresses the data as much as possible and then restores the compressed data to its original input shape.

The compressing part is the Encoder, and the restoring part is the Decoder.

During compression, the Encoder extracts Z, which holds the meaningful information in the data. Z is usually called the latent vector; it is also referred to as a 'latent variable', 'code', or 'feature'.
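The compress-then-restore path can be sketched with plain Python lists. This assumes a purely linear Encoder and Decoder with hand-picked, hypothetical weights: the Encoder averages neighboring pairs of a 6-dimensional input to get a 3-dimensional Z, and the Decoder copies each value of Z back into two positions.

```python
def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector (a list of numbers)."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


# Hypothetical Encoder weights (3 x 6): averages pairs, so 6 dims -> 3 dims.
W_ENC = [
    [0.5, 0.5, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.5, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.5, 0.5],
]
# Hypothetical Decoder weights (6 x 3): the mirror shape, copying each
# value of Z back into two positions, so 3 dims -> 6 dims.
W_DEC = [
    [1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0],
]


def encode(x):
    """Compress a 6-dim input into a 3-dim latent vector Z."""
    return matvec(W_ENC, x)


def decode(z):
    """Restore a 6-dim output from the 3-dim latent vector Z."""
    return matvec(W_DEC, z)


x = [2.0, 2.0, 4.0, 4.0, 6.0, 6.0]
z = encode(x)
x_hat = decode(z)
print(z)      # [2.0, 4.0, 6.0]
print(x_hat)  # [2.0, 2.0, 4.0, 4.0, 6.0, 6.0]

# An input whose pairs differ cannot be restored exactly: (1, 3) and (2, 2)
# have the same average, so both become 2.0 in Z.
print(decode(encode([1.0, 3.0, 4.0, 4.0, 6.0, 6.0])))  # [2.0, 2.0, 4.0, 4.0, 6.0, 6.0]
```

The last call shows the cost of compression: two different inputs with the same pairwise averages map to the same Z, so the Decoder cannot tell them apart. A trained AutoEncoder learns weights that keep as much useful information as the smaller Z allows.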

- Why it is called an AutoEncoder

We do not know the latent vector in advance; we only instruct the Encoder to compress the data into 3 dimensions. The model then finds the latent vector automatically by learning to reconstruct its own input.

That is why a model that extracts the latent vector automatically is called an 'AutoEncoder'.

AutoEncoders are used in unsupervised learning, which finds hidden patterns in unlabeled datasets. The training target is the input itself, so no labels are needed. For example, finding latent vectors for the MNIST dataset with an AutoEncoder is unsupervised learning, because training does not use any label information.
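The "automatic" part and the unsupervised setting can be sketched together. The code below is a minimal example built on several assumptions: a linear AutoEncoder (no activation functions), mean squared reconstruction error as the loss, and plain full-batch gradient descent with hand-written gradients. The dataset, learning rate, step count, and the name `train_linear_autoencoder` are hypothetical. Note that the data has no labels; the only training target is each input itself.

```python
import random


def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / len(a)


def train_linear_autoencoder(data, latent_dim, lr=0.05, steps=2000, seed=0):
    """Hypothetical helper: gradient descent on reconstruction error only (no labels)."""
    rng = random.Random(seed)
    d, k, n = len(data[0]), latent_dim, len(data)
    # Encoder E is k x d, Decoder D is d x k (mirror shapes).
    E = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(k)]
    D = [[rng.uniform(-0.5, 0.5) for _ in range(k)] for _ in range(d)]

    def forward(x):
        z = [sum(E[j][m] * x[m] for m in range(d)) for j in range(k)]
        x_hat = [sum(D[i][j] * z[j] for j in range(k)) for i in range(d)]
        return z, x_hat

    def loss():
        return sum(mse(forward(x)[1], x) for x in data) / n

    initial = loss()
    for _ in range(steps):
        gE = [[0.0] * d for _ in range(k)]
        gD = [[0.0] * k for _ in range(d)]
        for x in data:
            z, x_hat = forward(x)
            # The target is the input itself: dLoss/dx_hat = 2 * (x_hat - x) / d.
            r = [2.0 * (x_hat[i] - x[i]) / d for i in range(d)]
            for i in range(d):
                for j in range(k):
                    gD[i][j] += r[i] * z[j] / n
            # Backpropagate through the Decoder (using weights before this update).
            dz = [sum(r[i] * D[i][j] for i in range(d)) for j in range(k)]
            for j in range(k):
                for m in range(d):
                    gE[j][m] += dz[j] * x[m] / n
        for i in range(d):
            for j in range(k):
                D[i][j] -= lr * gD[i][j]
        for j in range(k):
            for m in range(d):
                E[j][m] -= lr * gE[j][m]
    return forward, initial, loss()


# Hypothetical unlabeled data: 4-dim vectors with only 2 degrees of
# freedom (a, a, b, b), so a 2-dim latent vector is enough in principle.
pairs = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (0.5, -0.5), (-1.0, 0.5)]
data = [[a, a, b, b] for a, b in pairs]

forward, initial_loss, final_loss = train_linear_autoencoder(data, latent_dim=2)
z, x_hat = forward(data[2])
print(f"reconstruction loss: {initial_loss:.4f} -> {final_loss:.4f}")
print("latent vector Z:", [round(v, 3) for v in z])
```

Nothing in the loop says what Z should contain; gradient descent alone shapes the Encoder so that Z keeps whatever the Decoder needs to rebuild the input. In practice you would use nonlinear layers and a framework such as PyTorch or Keras instead of hand-written gradients.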
