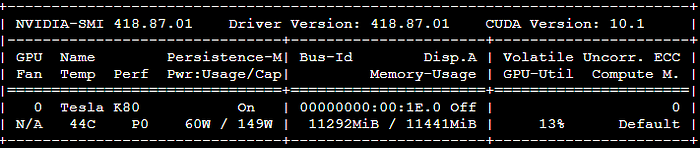

- nvidia-smi

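The NVIDIA System Management Interface (nvidia-smi) is a command-line tool for monitoring and managing NVIDIA GPUs. The fields in its output are explained below.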

Temp: Core GPU temperature in degrees Celsius. On AWS the datacenter controls cooling, so you rarely need to worry about it unless you run your own hardware. The “44C” shown in the table above is normal, but raise an alarm if it reaches 90 C or more.

Perf: The GPU’s current performance state. It ranges from P0 (maximum performance) to P12 (minimum performance).

Persistence-M: The value of the Persistence Mode flag. “On” means that the NVIDIA driver stays loaded (persists) even when no active client such as nvidia-smi is running. This reduces driver load latency for dependent apps such as CUDA programs.

Pwr: Usage/Cap: The GPU’s current power draw out of its total power capacity, reported in watts.

Bus-Id: The GPU’s PCI bus id in the form “domain:bus:device.function”, in hex. It can be used to filter the stats for a particular device.
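As a rough illustration of the “domain:bus:device.function” format, here is a minimal Python sketch that splits a bus id into its hex fields and builds an `nvidia-smi -i` command for that one device. The helper names `parse_bus_id` and `smi_command_for` are hypothetical, and the bus id `00000000:00:1E.0` is only an example value, not one taken from the table above.

```python
import re

# Pattern for "domain:bus:device.function", every field in hex.
BUS_ID_RE = re.compile(
    r"^(?P<domain>[0-9A-Fa-f]{4,8}):(?P<bus>[0-9A-Fa-f]{2}):"
    r"(?P<device>[0-9A-Fa-f]{2})\.(?P<function>[0-9A-Fa-f])$"
)


def parse_bus_id(bus_id):
    """Split a PCI bus id into integer fields (hypothetical helper)."""
    match = BUS_ID_RE.match(bus_id.strip())
    if match is None:
        raise ValueError(f"not a PCI bus id: {bus_id!r}")
    return {name: int(value, 16) for name, value in match.groupdict().items()}


def smi_command_for(bus_id):
    """Build an nvidia-smi command restricted to one device via -i."""
    parse_bus_id(bus_id)  # validate before building the command
    return ["nvidia-smi", "-i", bus_id]


# Example value only (an assumption, not read from the screenshot).
print(parse_bus_id("00000000:00:1E.0"))
print(" ".join(smi_command_for("00000000:00:1E.0")))
```

The `-i` option of nvidia-smi also accepts the GPU index or UUID, so the bus id is just one of several ways to select a device.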

Disp.A: Display Active is a flag that indicates whether a display is initialized on the GPU, in which case memory is allocated on the device for display. Here, “Off” indicates that no display is using the GPU.

Memory-Usage: The memory allocated on the GPU out of its total memory. TensorFlow, or Keras with the TensorFlow backend, by default allocates nearly all of the GPU memory at launch, even if it does not need it.
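If the greedy allocation makes the Memory-Usage column hard to read, one option is to ask TensorFlow to grow its allocation on demand. The sketch below assumes TensorFlow 2.x. It sets the `TF_FORCE_GPU_ALLOW_GROWTH` environment variable and calls `tf.config.experimental.set_memory_growth`, and it skips the TensorFlow part if the package is not installed.

```python
import os

# Must be set before TensorFlow initializes the GPU.
os.environ["TF_FORCE_GPU_ALLOW_GROWTH"] = "true"

try:
    import tensorflow as tf
except ImportError:
    tf = None  # TensorFlow not installed; nothing more to configure.

if tf is not None:
    # Equivalent per-device setting; must run before any GPU work starts.
    for gpu in tf.config.list_physical_devices("GPU"):
        tf.config.experimental.set_memory_growth(gpu, True)
```

With memory growth enabled, the number shown by nvidia-smi is closer to what the model actually uses, although TensorFlow does not release memory back once it has been allocated.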

Volatile Uncorr. ECC: ECC stands for Error Correction Code, which verifies data by detecting and correcting errors. NVIDIA GPUs report a count of ECC errors, and this column shows the uncorrectable ones. The volatile counter tracks the errors detected since the driver was last loaded.

GPU-Util: The percentage of GPU utilization, i.e. the percentage of time over the sample period during which kernels were running on the GPU. The sample period can be between 1 second and 1/6 second. For instance, the table above shows 13%. A low percentage means the GPU is under-utilized, for example when the code spends its time reading data (mini-batches) from disk.

Compute M.: The compute mode of a specific GPU refers to its shared access mode. It resets to “Default” after each reboot. The “Default” value allows multiple clients to access the GPU at the same time.
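The fields in the table can also be read from a script. The following is a minimal sketch, not a definitive tool: it uses the `--query-gpu` and `--format=csv,noheader,nounits` options of nvidia-smi and parses the result into dictionaries. The function names are hypothetical, and in the sample line only the 44 C temperature and the 13% utilization come from the table discussed above; the other values are made up for illustration.

```python
import csv
import io
import subprocess

# Query fields understood by `nvidia-smi --query-gpu`.
FIELDS = ["index", "temperature.gpu", "pstate", "utilization.gpu",
          "memory.used", "memory.total", "power.draw"]


def parse_gpu_csv(text):
    """Turn `--format=csv,noheader,nounits` output into a list of dicts."""
    rows = []
    for record in csv.reader(io.StringIO(text), skipinitialspace=True):
        if not record:
            continue  # skip blank lines
        rows.append(dict(zip(FIELDS, (value.strip() for value in record))))
    return rows


def query_gpus():
    """Run nvidia-smi once and return one dict per GPU."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=" + ",".join(FIELDS),
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)


def too_hot(row, limit_c=90):
    """Flag a GPU at or above the 90 C mark mentioned for Temp."""
    return int(row["temperature.gpu"]) >= limit_c


# Illustrative line: 44 C and 13% match the text, the rest is assumed.
sample = "0, 44, P0, 13, 10956, 11441, 57.32\n"
for gpu in parse_gpu_csv(sample):
    print(gpu["index"], gpu["temperature.gpu"], gpu["utilization.gpu"], too_hot(gpu))
```

On a machine with an NVIDIA driver installed, calling `query_gpus()` instead of parsing `sample` would return the live values.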

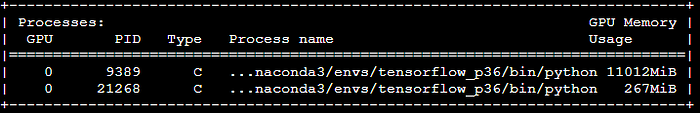

GPU: The GPU index, which is useful in a multi-GPU setup because it shows which process is using which GPU. This index is the NVML index of the device.

PID: The ID of the process using the GPU.

Type: The type of the process: “C” (Compute), “G” (Graphics), or “C+G” (Compute and Graphics context).

Process Name: Self-explanatory

GPU Memory Usage: The amount of memory on that GPU used by each process.
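The process table can be queried in a similar way. The sketch below assumes the `--query-compute-apps` option of nvidia-smi, which lists compute (“C”) processes only. The function names and the sample lines are hypothetical.

```python
import subprocess


def parse_compute_apps(text):
    """Parse `pid, used_memory, process_name` CSV lines into dicts."""
    apps = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split at most twice so a comma in the process name survives.
        pid, used, name = [part.strip() for part in line.split(",", 2)]
        apps.append({
            "pid": int(pid),
            # Keep only the number in case a " MiB" unit is still attached.
            "used_mib": int(used.split()[0]),
            "name": name,
        })
    return apps


def query_compute_apps():
    """Run nvidia-smi once and return the compute processes on all GPUs."""
    result = subprocess.run(
        ["nvidia-smi", "--query-compute-apps=pid,used_memory,process_name",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_compute_apps(result.stdout)


# Made-up sample output for illustration.
sample = "1234, 10877, python\n4321, 60 MiB, /usr/bin/python3\n"
for app in parse_compute_apps(sample):
    print(app["pid"], app["used_mib"], app["name"])
```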

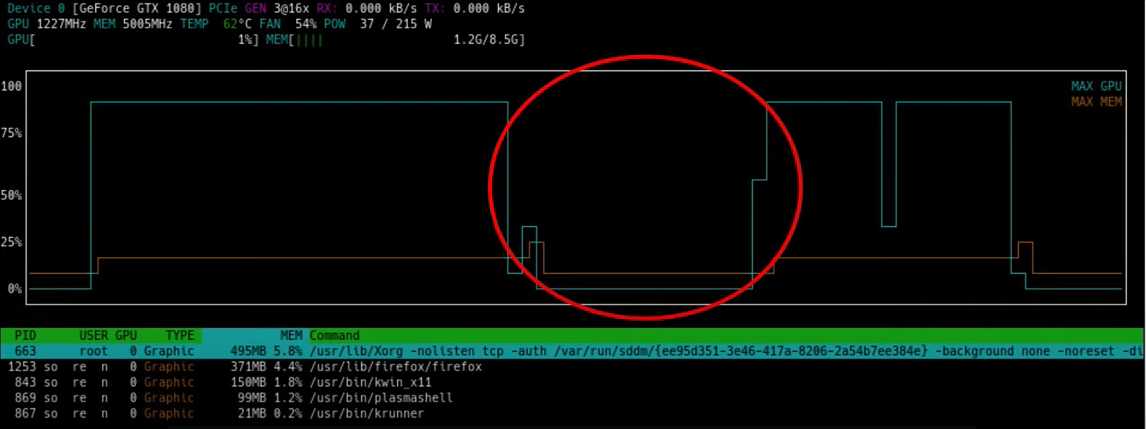

- nvtop

A tool to track the performance of your GPU.

It shows GPU utilization and memory usage over time. It essentially presents the same information as nvidia-smi, displayed in real time.

If you see a pattern where GPU usage drops significantly (red circle), data loading is slower than GPU processing. In this case, the system runs the forward and backward passes faster than it loads the data, and the GPU sits idle waiting for more data to process.
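The same pattern can be checked numerically. The sketch below is a rough heuristic under stated assumptions, not part of nvtop: it samples `utilization.gpu` through nvidia-smi and reports how often, and for how long, utilization falls below a threshold. The 30% threshold, the function names, and the sample trace are all made up for illustration.

```python
import subprocess
import time


def sample_utilization(count=10, interval_s=1.0, gpu_index=0):
    """Collect `count` GPU-Util readings (percent) from one GPU."""
    samples = []
    for _ in range(count):
        result = subprocess.run(
            ["nvidia-smi", "-i", str(gpu_index),
             "--query-gpu=utilization.gpu", "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
        samples.append(int(result.stdout.strip()))
        time.sleep(interval_s)
    return samples


def starved_fraction(samples, threshold=30):
    """Fraction of samples where utilization is below the threshold."""
    if not samples:
        return 0.0
    return sum(1 for value in samples if value < threshold) / len(samples)


def longest_low_run(samples, threshold=30):
    """Length of the longest consecutive run of low-utilization samples."""
    longest = current = 0
    for value in samples:
        current = current + 1 if value < threshold else 0
        longest = max(longest, current)
    return longest


# Made-up trace with periodic dips, similar to the circled pattern.
trace = [95, 97, 12, 8, 96, 94, 10, 9, 11, 95]
print(starved_fraction(trace), longest_low_run(trace))
```

A high starved fraction with regularly repeating dips suggests the GPU is waiting on the input pipeline rather than on computation.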

- How to fix it?
