- Backpropagation

Whereas logistic regression has a single layer of weights, neural networks stack many layers, each followed by a non-linear activation.

To update the weights of the neurons, we need the gradient of the loss with respect to every weight. The mechanism that computes these gradients is called backpropagation. It propagates the gradient from the last layer back to the first one.

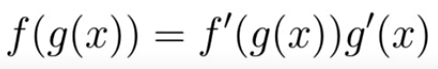
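As a rough illustration of the idea, here is a minimal sketch for a tiny network with one weight per layer. The network shape, the squared-error loss, the input values, and the learning rate `lr` are all assumptions chosen for the example, not something taken from the figure above.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward_backward(x, y, w1, w2):
    """Forward and backward pass for a tiny network: x -> w1 -> sigmoid -> w2 -> y_hat."""
    # Forward pass, first layer to last
    z1 = w1 * x
    a1 = sigmoid(z1)
    y_hat = w2 * a1
    loss = 0.5 * (y_hat - y) ** 2

    # Backward pass, last layer to first
    d_yhat = y_hat - y               # dL/dy_hat
    d_w2 = d_yhat * a1               # dL/dw2
    d_a1 = d_yhat * w2               # gradient handed back to the hidden layer
    d_z1 = d_a1 * a1 * (1.0 - a1)    # through the sigmoid
    d_w1 = d_z1 * x                  # dL/dw1
    return loss, d_w1, d_w2

x, y = 1.5, 0.2        # hypothetical training example
w1, w2 = 0.8, -0.5     # hypothetical initial weights
lr = 0.1               # hypothetical learning rate

loss, d_w1, d_w2 = forward_backward(x, y, w1, w2)

# Gradient-descent update using the gradients that backpropagation produced
w1_new = w1 - lr * d_w1
w2_new = w2 - lr * d_w2
new_loss, _, _ = forward_backward(x, y, w1_new, w2_new)
```

Note that the gradient for `w1` reuses `d_a1`, which was computed while handling the last layer. That reuse is what "propagating the gradient backwards" means in practice.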

- Chain rule

The chain rule lets you decompose the derivative of a composite function into a product of the derivatives of its parts. It is at the core of the backpropagation algorithm.

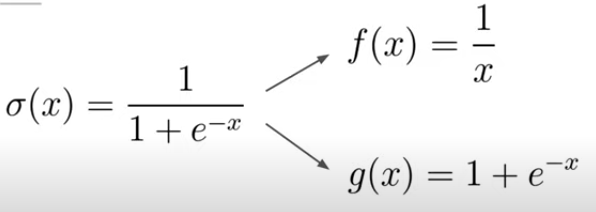

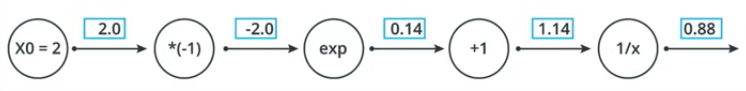

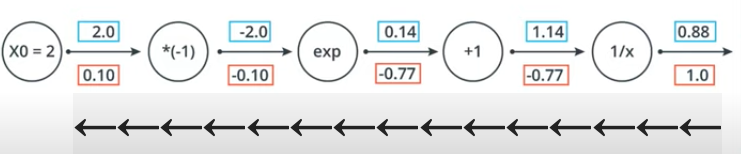
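A small worked example may help make the chain rule concrete. The functions f, g, and h below are chosen only for illustration and are not taken from the figures above.

```latex
% Chain rule for a composite function h(x) = f(g(x))
\frac{dh}{dx} = \frac{df}{dg} \cdot \frac{dg}{dx}

% Example: h(x) = (3x + 1)^2, with g(x) = 3x + 1 and f(g) = g^2
\frac{df}{dg} = 2g, \qquad \frac{dg}{dx} = 3

\frac{dh}{dx} = 2(3x + 1) \cdot 3 = 6(3x + 1)
```

In a network with several layers, the same rule is applied repeatedly: each layer contributes one factor to the product.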

Let's say we want to backpropagate through the sigmoid function:

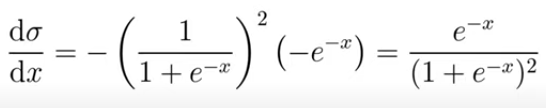

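Applying the chain rule to σ(x) = 1 / (1 + e^(−x)) gives the derivative σ'(x) = σ(x)(1 − σ(x)).

So the backpropagation result for a sigmoid is the gradient arriving from the next layer multiplied by σ(x)(1 − σ(x)).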
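One way to sanity-check the sigmoid gradient is to compare the analytic value σ(x)(1 − σ(x)) against a finite-difference estimate. The helper names `sigmoid_backward` and `numerical_derivative`, and the test point `x = 0.5`, are hypothetical and chosen only for this sketch.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_backward(x, upstream_grad):
    """Gradient flowing back through a sigmoid node, via the chain rule."""
    s = sigmoid(x)
    local_grad = s * (1.0 - s)          # d(sigmoid)/dx
    return upstream_grad * local_grad   # chain rule: upstream times local

def numerical_derivative(f, x, eps=1e-6):
    """Central finite-difference estimate of f'(x)."""
    return (f(x + eps) - f(x - eps)) / (2.0 * eps)

x = 0.5
analytic = sigmoid_backward(x, upstream_grad=1.0)
numeric = numerical_derivative(sigmoid, x)
print(abs(analytic - numeric))  # should be very small
```

The local gradient peaks at 0.25 when x = 0 and shrinks toward zero for large |x|, which is why gradients can become very small after passing through many sigmoid layers.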
