- TestError

The error rate of the model on the test dataset.

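For squared-error loss, the expected test error decomposes as:

$$\text{TestError} = \text{Bias}^2 + \text{Var} + e$$

where e is the irreducible error, the noise in the data that no model can remove. Note that the bias term enters squared.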
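The decomposition follows from a short derivation. This is a sketch under assumptions introduced here for illustration: the data are generated as $y = f(x) + \epsilon$ with $\mathbb{E}[\epsilon] = 0$ and $\operatorname{Var}(\epsilon) = \sigma^2$, $\hat f$ is the model trained on a random training set $D$, and the noise at the test point is independent of $D$.

$$
\begin{aligned}
\mathbb{E}_{D,\epsilon}\big[(y - \hat f(x))^2\big]
&= \mathbb{E}_{D,\epsilon}\big[(f(x) + \epsilon - \hat f(x))^2\big] \\
&= \mathbb{E}_{D}\big[(f(x) - \hat f(x))^2\big] + \sigma^2 \\
&= \underbrace{\big(f(x) - \mathbb{E}_D[\hat f(x)]\big)^2}_{\text{Bias}^2}
 + \underbrace{\mathbb{E}_D\big[(\hat f(x) - \mathbb{E}_D[\hat f(x)])^2\big]}_{\text{Var}}
 + \underbrace{\sigma^2}_{e}
\end{aligned}
$$

The cross term in the second line vanishes because $\epsilon$ has zero mean and is independent of $\hat f$. The cross term in the third line (after adding and subtracting $\mathbb{E}_D[\hat f(x)]$) vanishes because $\mathbb{E}_D\big[\hat f(x) - \mathbb{E}_D[\hat f(x)]\big] = 0$.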

- Variance

It quantifies the sensitivity of the model to the training data. In other words, if we replaced our training dataset with another one drawn from the same distribution, how much would the model's parameters, and therefore its predictions, change? A low variance means that our model is not sensitive to the particular training set it sees, which helps it generalize well.
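To make "replace our training dataset with another one" concrete, here is a minimal Monte Carlo sketch in Python with NumPy. It assumes a hypothetical setup that is not taken from this post: a ground truth `true_f(x) = sin(2πx)`, Gaussian noise, 20 training points per dataset, and polynomial regression (`np.polyfit`) as the model, with the polynomial degree standing in for model complexity. `prediction_variance` is a helper name made up for this example.

```python
import numpy as np

def true_f(x):
    # Hypothetical ground-truth function chosen for illustration
    return np.sin(2 * np.pi * x)

def prediction_variance(degree, n_datasets=200, n_points=20, noise=0.3, seed=0):
    """Train a polynomial of the given degree on many independently drawn
    training sets and return the variance of its predictions, averaged
    over a grid of test inputs."""
    rng = np.random.default_rng(seed)
    x_test = np.linspace(0.0, 1.0, 50)
    preds = []
    for _ in range(n_datasets):
        # "Replace the training dataset with another one" from the same distribution
        x = rng.uniform(0.0, 1.0, n_points)
        y = true_f(x) + rng.normal(0.0, noise, n_points)
        coeffs = np.polyfit(x, y, degree)
        preds.append(np.polyval(coeffs, x_test))
    # Variance across datasets at each test input, then averaged over inputs
    return float(np.mean(np.var(np.array(preds), axis=0)))

for degree in (1, 3, 9):
    print(degree, round(prediction_variance(degree), 4))
```

With this setup, the degree-9 polynomial's predictions should spread much more across training sets than the straight line's, which is what a high variance means.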

- Bias

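It quantifies how well our model can capture the underlying pattern in the data. Formally, it is the gap between the model's average prediction (over possible training sets) and the true target. A high-bias model is too simple to capture that pattern, so it underfits; a low-bias model typically reaches a very low error rate on the training dataset.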
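Under the framing above, a low-bias model fits its training data closely. The sketch below uses the same kind of hypothetical setup (an assumed `sin(2πx)` ground truth, Gaussian noise, polynomial models via `np.polyfit`; `training_mse` is an illustrative name). It fits models of increasing degree to one training set and reports the training error. Strictly speaking, a low training error is only a symptom of low bias, not the bias itself.

```python
import numpy as np

def true_f(x):
    # Hypothetical ground-truth function chosen for illustration
    return np.sin(2 * np.pi * x)

def training_mse(degree, n_points=20, noise=0.3, seed=0):
    """Fit a polynomial of the given degree to one training set and
    return its mean squared error on that same training set."""
    # A fixed seed means every degree is fit to the identical training set
    rng = np.random.default_rng(seed)
    x = rng.uniform(0.0, 1.0, n_points)
    y = true_f(x) + rng.normal(0.0, noise, n_points)
    coeffs = np.polyfit(x, y, degree)
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

for degree in (1, 3, 9):
    print(degree, round(training_mse(degree), 4))
```

A higher-degree polynomial can represent any lower-degree one, so its least-squares training error can only stay the same or go down as the degree grows.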

- Bias-Variance Trade-off

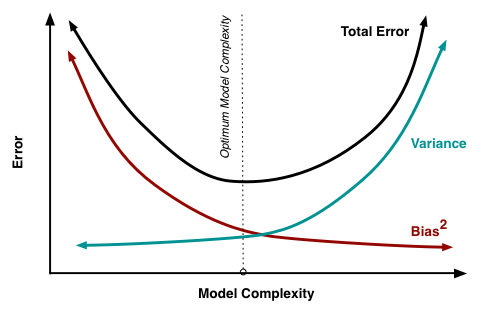

To minimize the TestError, we need to keep both the bias and the variance low.

As the model becomes more complex, the bias decreases because the model has more capacity to fit the training data.

However, the variance increases with complexity because the model becomes more specialized to its particular training set and loses its ability to generalize.

If we sum both terms (together with the irreducible error e), we obtain the Total Error (TestError).

The Total Error curve shows that there is an optimal complexity at which the Total Error is minimized. Finding this balance between bias and variance is what we call the bias-variance trade-off.
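The curves in the figure can be reproduced approximately with a Monte Carlo sketch. It makes the same assumptions as before: a hypothetical `sin(2πx)` ground truth, a noise standard deviation of 0.3, 20 training points, and polynomial degree as the complexity knob. For each degree, it estimates Bias² and Var, adds the irreducible error, and picks the degree with the smallest total. The function names are illustrative only, and the exact numbers depend on the random seed.

```python
import numpy as np

def true_f(x):
    # Hypothetical ground-truth function used only for this illustration
    return np.sin(2 * np.pi * x)

def bias_variance_sweep(degrees, n_datasets=200, n_points=20, noise=0.3, seed=0):
    """Monte Carlo estimate of Bias^2, Var, and expected test error
    for polynomial models of each degree."""
    rng = np.random.default_rng(seed)
    x_test = np.linspace(0.0, 1.0, 50)
    # Draw all training sets once so every degree sees the same data
    datasets = []
    for _ in range(n_datasets):
        x = rng.uniform(0.0, 1.0, n_points)
        y = true_f(x) + rng.normal(0.0, noise, n_points)
        datasets.append((x, y))
    results = {}
    for degree in degrees:
        # preds[i, j] = prediction of the model trained on dataset i at x_test[j]
        preds = np.array([np.polyval(np.polyfit(x, y, degree), x_test) for x, y in datasets])
        mean_pred = preds.mean(axis=0)
        bias2 = float(np.mean((mean_pred - true_f(x_test)) ** 2))
        variance = float(np.mean(preds.var(axis=0)))
        results[degree] = {
            "bias2": bias2,
            "variance": variance,
            # Irreducible error e = noise**2 is the same for every degree
            "total": bias2 + variance + noise ** 2,
        }
    return results

def optimal_degree(results):
    # Degree whose estimated total error is smallest
    return min(results, key=lambda d: results[d]["total"])

results = bias_variance_sweep(range(10))
for d, r in results.items():
    print(f"degree={d}  bias^2={r['bias2']:.4f}  var={r['variance']:.4f}  total={r['total']:.4f}")
print("optimal degree:", optimal_degree(results))
```

In a run like this, bias² drops sharply over the first few degrees while variance grows at high degrees. As a result, the minimum of the total typically lands at an intermediate degree rather than at either extreme.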
