- LSTM (Long Short-Term Memory)

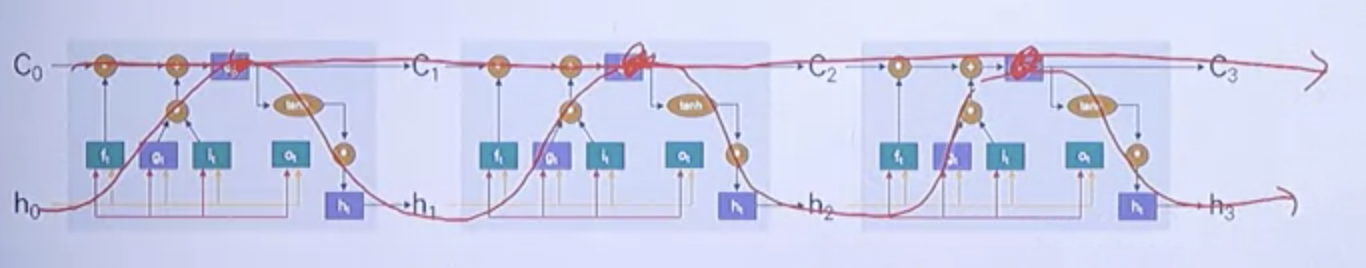

- The model was designed to make up for the weaknesses of a plain RNN.
- It can bridge time lags of more than 1000 steps.
- It can capture long-term dependencies.

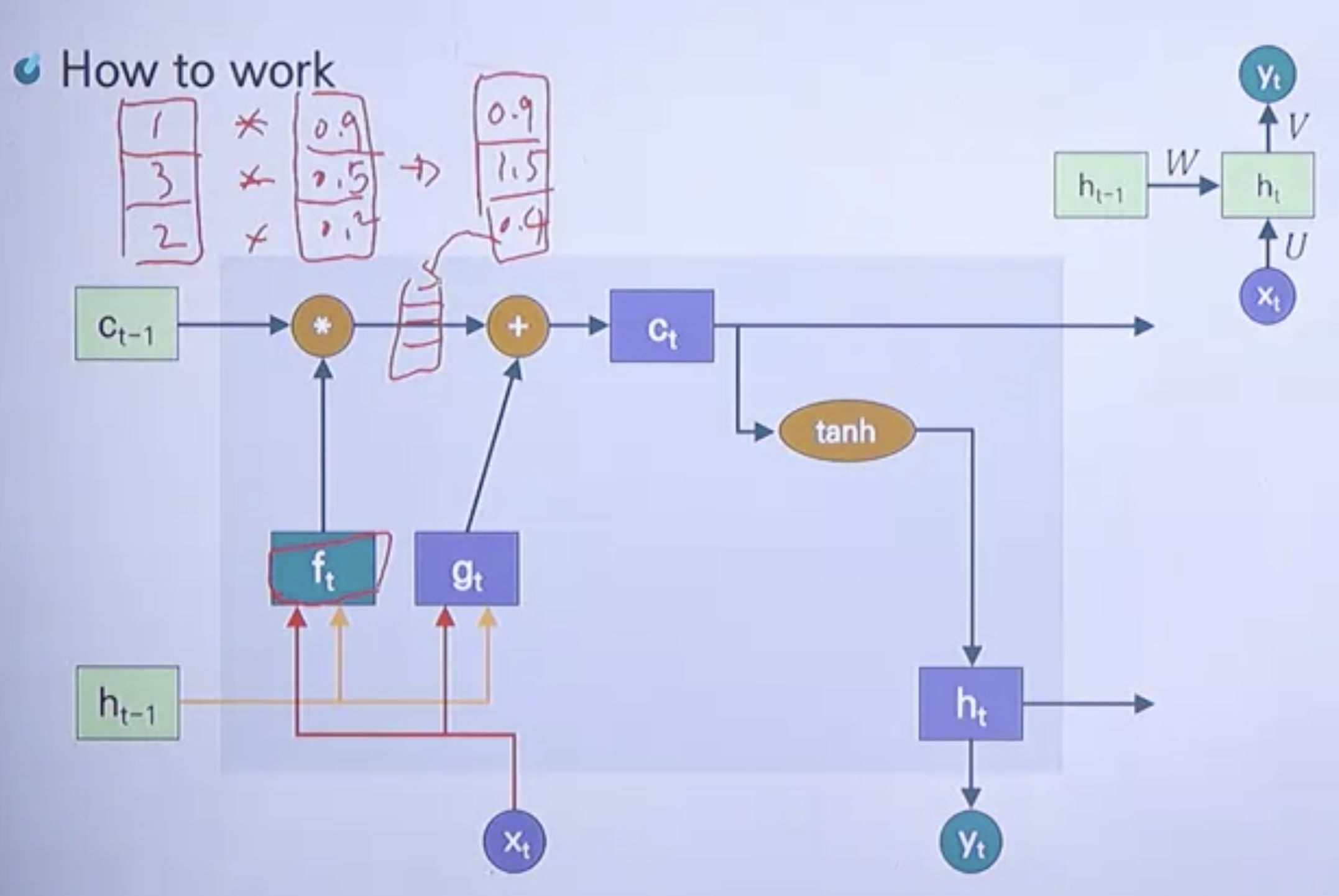

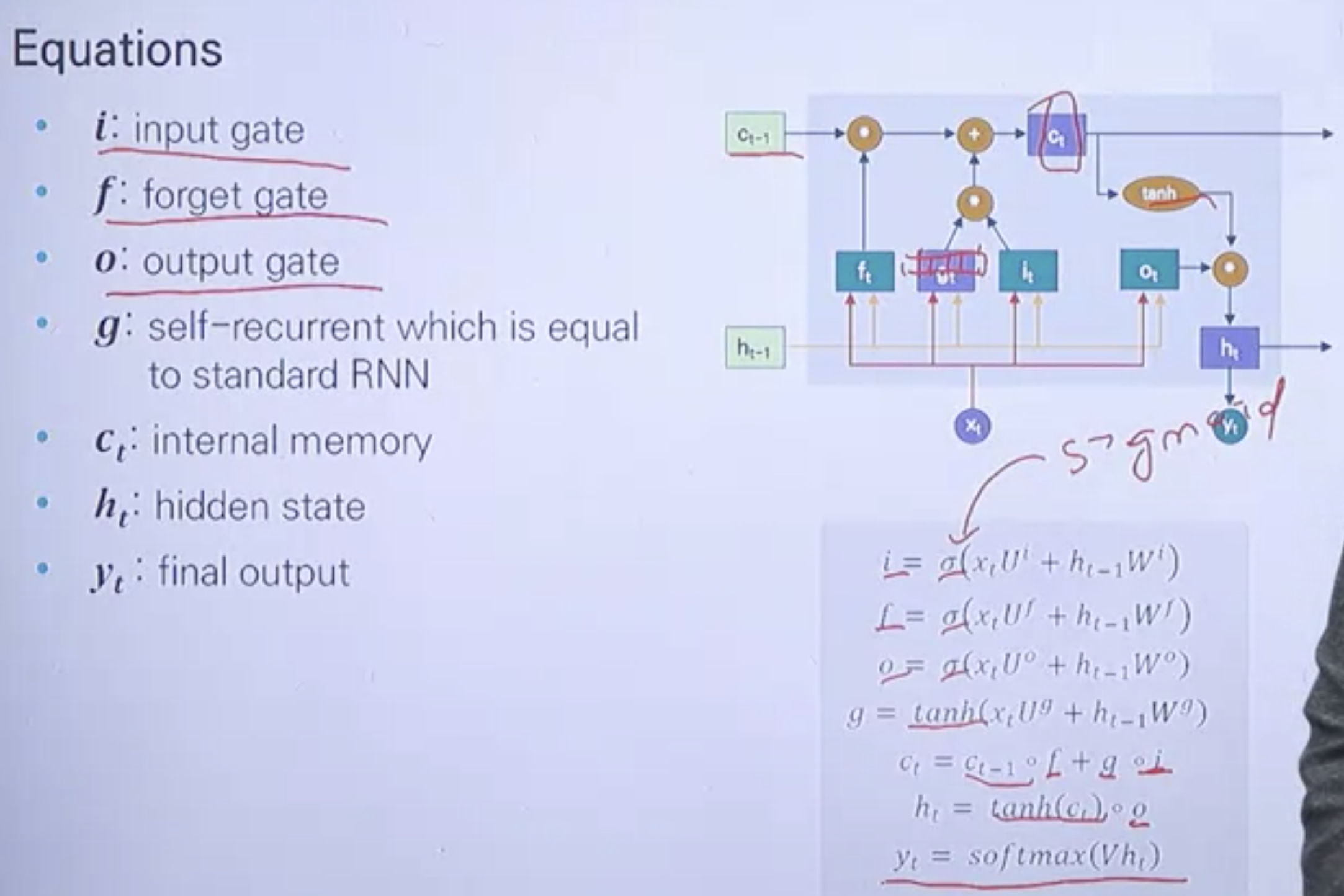

σ is the sigmoid function, whose output lies between 0 and 1.
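For reference, the standard LSTM update equations are written out here. The symbol names ($W_f$, $b_f$, and so on) follow the common textbook convention and are an assumption; the figures above may label them differently.

$$
\begin{aligned}
f_t &= \sigma(W_f [h_{t-1}, x_t] + b_f) \\
i_t &= \sigma(W_i [h_{t-1}, x_t] + b_i) \\
o_t &= \sigma(W_o [h_{t-1}, x_t] + b_o) \\
\tilde{C}_t &= \tanh(W_C [h_{t-1}, x_t] + b_C) \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \\
h_t &= o_t \odot \tanh(C_t)
\end{aligned}
$$

Because each gate passes through σ, it acts as a soft switch between 0 (block) and 1 (pass).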

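The cell state C is like a highway and the hidden state h is like a national road: C is updated only by element-wise scaling and addition, so information can travel along it over many steps with little interference.

Therefore, vanishing gradients are less likely to occur.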
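The highway analogy can be made concrete with a minimal pure-Python sketch of one LSTM step in the standard formulation. The parameter names (`Wf`, `bf`, ...) and the demo values are assumptions chosen for illustration: the forget gate is biased towards 1 and the input gate towards 0, so the cell state should survive 1000 steps almost unchanged.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, v, b):
    # W: list of weight rows, v: input vector, b: bias vector
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step on plain Python lists."""
    z = h_prev + x  # list concatenation, i.e. [h_{t-1}, x_t]
    f = [sigmoid(a) for a in affine(params["Wf"], z, params["bf"])]    # forget gate
    i = [sigmoid(a) for a in affine(params["Wi"], z, params["bi"])]    # input gate
    o = [sigmoid(a) for a in affine(params["Wo"], z, params["bo"])]    # output gate
    g = [math.tanh(a) for a in affine(params["Wc"], z, params["bc"])]  # candidate
    # The "highway": the old cell state is only scaled and added to.
    c = [ft * cp + it * gt for ft, cp, it, gt in zip(f, c_prev, i, g)]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c

# Hypothetical parameters: hidden size 1, input size 1, all weights zero.
zeros = [[0.0, 0.0]]
params = {
    "Wf": zeros, "bf": [10.0],   # forget gate close to 1 (keep the cell state)
    "Wi": zeros, "bi": [-10.0],  # input gate close to 0 (write almost nothing)
    "Wo": zeros, "bo": [0.0],
    "Wc": zeros, "bc": [0.0],
}

h, c = [0.0], [1.0]
for _ in range(1000):
    h, c = lstm_step([0.5], h, c, params)

print(c[0])  # stays close to the initial value of 1.0
```

With a forget gate near 1 the cell state is carried forward almost untouched, which is the same path that lets gradients flow backwards without shrinking at every step.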

https://www.coursera.org/learn/cnns-and-rnns/lecture/RJpfH/new-video

1. Input layer that receives 10 inputs

Input(shape=(10,))

2. Words to dense vectors.

vocab_size = 7

embedding_dim = 2

Embedding(vocab_size, embedding_dim, input_length=5)
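A minimal sketch of what the embedding layer produces, assuming TensorFlow 2.x is installed. The sample token IDs are made up for illustration, and `input_length` is left out here because newer Keras versions flag it as deprecated.

```python
import numpy as np
import tensorflow as tf

vocab_size = 7
embedding_dim = 2

# Maps each integer token ID in [0, vocab_size) to a trainable 2-d vector.
embedding = tf.keras.layers.Embedding(vocab_size, embedding_dim)

# One sentence of 5 token IDs (hypothetical values).
token_ids = np.array([[1, 2, 3, 4, 0]])
vectors = embedding(token_ids)

print(vectors.shape)  # (batch, sequence length, embedding_dim)
```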

3. Set the hidden size to 256

LSTM(units=256)

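4. Return the hidden state at every time step, and the final states

return_sequences=True  # output the hidden state at every time step

return_state=True  # also return the final hidden state and cell state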
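A short sketch of the two flags used together, assuming TensorFlow 2.x. The input shape (2 sequences, 5 time steps, 3 features) and the 4 units are arbitrary values picked to keep the shapes easy to read.

```python
import numpy as np
import tensorflow as tf

# Hypothetical batch: 2 sequences, 5 time steps, 3 features each.
x = np.random.rand(2, 5, 3).astype("float32")

lstm = tf.keras.layers.LSTM(units=4, return_sequences=True, return_state=True)
all_h, last_h, last_c = lstm(x)

print(all_h.shape)   # hidden state at every time step: (2, 5, 4)
print(last_h.shape)  # final hidden state: (2, 4)
print(last_c.shape)  # final cell state: (2, 4)

# The last slice of the full sequence is the final hidden state.
same = np.allclose(np.asarray(all_h)[:, -1, :], np.asarray(last_h))
print(same)
```

With `return_sequences=False` (the default) the first output would be only the final hidden state, which is what a following `Dense` classifier usually needs.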

5. Dense(units, input_dim, activation)

model.add(Dense(1, input_dim=1, activation='sigmoid'))  # with one output unit, use sigmoid; softmax needs one unit per class
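A softmax over a single unit always returns 1.0, so for a one-unit output layer a sigmoid is the usual choice, while softmax is used with one unit per class. A small check using only the standard library (the helper functions are written here for illustration and are not Keras APIs):

```python
import math

def softmax(logits):
    # Subtract the max for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

logits = (-3.0, 0.0, 3.0)

# Softmax over one unit: the output ignores the logit entirely.
single_unit = [softmax([z])[0] for z in logits]
print(single_unit)  # [1.0, 1.0, 1.0]

# Sigmoid on the same logits gives a usable probability.
print([round(sigmoid(z), 3) for z in logits])  # [0.047, 0.5, 0.953]
```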

6. Model(input, output)

tf.keras.Model(inputs=inputs, outputs=outputs)

7. compile

model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['acc'])

8. model.fit(x, y, batch_size, epochs)

model.fit(x, y, batch_size=64, epochs=40, validation_split=0.2)
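The steps above can be put together into one small end-to-end sketch, assuming TensorFlow 2.x. The training data is random noise, and `seq_len`, `num_classes`, the sample count, and the reduced epoch count are assumptions made only so the example runs quickly; the model will not learn anything meaningful from it.

```python
import numpy as np
import tensorflow as tf

vocab_size = 7
embedding_dim = 2
seq_len = 10      # assumed sequence length
num_classes = 3   # assumed number of target classes

# Steps 1-5: input -> embedding -> LSTM -> softmax over the classes.
inputs = tf.keras.Input(shape=(seq_len,), dtype="int32")
x = tf.keras.layers.Embedding(vocab_size, embedding_dim)(inputs)
x = tf.keras.layers.LSTM(units=256)(x)
outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

# Step 6: wrap inputs and outputs in a Model.
model = tf.keras.Model(inputs=inputs, outputs=outputs)

# Step 7: integer class labels pair with sparse_categorical_crossentropy.
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["acc"])

# Step 8: fit on random placeholder data.
rng = np.random.default_rng(0)
x_train = rng.integers(0, vocab_size, size=(200, seq_len))
y_train = rng.integers(0, num_classes, size=(200,))
history = model.fit(x_train, y_train, batch_size=64, epochs=2,
                    validation_split=0.2, verbose=0)

print(model.output_shape)
print(sorted(history.history.keys()))
```

`validation_split=0.2` holds out the last 20% of the samples for validation, so the history contains validation metrics alongside the training ones.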