- contiguous

Returns a contiguous in memory tensor containing the same data as self tensor. If self tensor is already in the specified memory format, this function returns the self tensor.
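Here is a minimal sketch of the two cases this definition describes, assuming a recent PyTorch version. If a tensor is already contiguous, `contiguous()` gives back the same memory. If it is a non-contiguous view, `contiguous()` allocates a new block and copies the data. The `memory_format` argument (default `torch.contiguous_format`) chooses which layout counts as "contiguous". `torch.channels_last` appears here only as an illustration, and it applies to 4-D tensors.

```python
import torch

x = torch.randn(3, 4)                    # freshly created: already contiguous
same = x.contiguous()                    # already in the requested format, no copy
print(same.data_ptr() == x.data_ptr())   # True: same memory

y = x.t()                                # transposed view: not contiguous
z = y.contiguous()                       # new memory block, data copied row by row
print(z.data_ptr() == y.data_ptr())      # False: different memory
print(torch.equal(z, y))                 # True: same values

# memory_format selects the target layout (channels_last needs a 4-D tensor)
img = torch.randn(2, 3, 4, 5)
cl = img.contiguous(memory_format=torch.channels_last)
print(cl.is_contiguous())                                   # False
print(cl.is_contiguous(memory_format=torch.channels_last))  # True
```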

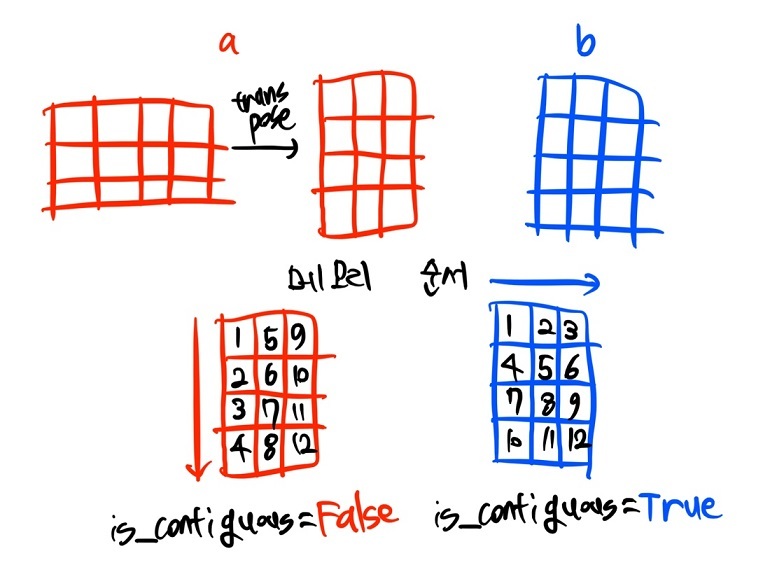

Let's define two tensors with the same shape.

```python
import torch

a = torch.randn(3, 4)
a.transpose_(0, 1)  # in-place transpose: a now has shape (4, 3)

b = torch.randn(4, 3)

# both tensors now have shape (4, 3)
```
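Before looking at addresses, it can help to print each tensor's `stride()`. The stride tells you how many elements you skip in memory when you move one step along each dimension. The short sketch below recreates the same two tensors:

```python
import torch

a = torch.randn(3, 4)
a.transpose_(0, 1)   # shape (4, 3), but still backed by the (3, 4) tensor's memory
b = torch.randn(4, 3)

print(a.shape, a.stride())  # torch.Size([4, 3]) (1, 4)
print(b.shape, b.stride())  # torch.Size([4, 3]) (3, 1)
```

For `a`, moving one column to the right skips 4 elements in memory. For `b`, the same move skips only 1.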

```python
print(a)
```

```
tensor([[-0.7290,  0.7509,  1.1666],
        [-0.9321, -0.4360, -0.2715],
        [ 0.1232, -0.6812, -0.0358],
        [ 1.1923, -0.8931, -0.1995]])
```

```python
print(b)
```

```
tensor([[-0.1630,  0.1704,  1.8583],
        [-0.1231, -1.5241,  0.2243],
        [-1.3705,  1.2717, -0.6051],
        [ 0.0412,  1.3312, -1.2066]])
```

Now, let's print the memory address of every element of `a` and `b`, visiting each row from left to right.

```python
# tensor a
for i in range(4):
    for j in range(3):
        print(a[i][j].data_ptr())
```

```
94418119497152
94418119497168
94418119497184
94418119497156
...
```

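```python
# tensor b
for i in range(4):
    for j in range(3):
        print(b[i][j].data_ptr())
```

```
94418119613696
94418119613700
94418119613704
94418119613708
...
```

The addresses of `b` increase by 4 bytes (the size of one float32 element) at every step, but those of `a` do not. Moving right along a row of `a` jumps 16 bytes, and the first element of the second row sits only 4 bytes after `a[0][0]`.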
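You can check these addresses against the strides with a little arithmetic. A float32 element takes 4 bytes. So the element offset of `a[i][j]` from `a[0][0]` should be `i * stride[0] + j * stride[1]`. The sketch below uses the four addresses printed for `a`, together with the strides `(1, 4)` for `a` and `(3, 1)` for `b` that `stride()` reports for these tensors. The helper `offsets` is hypothetical and exists only for illustration.

```python
ELEMENT_SIZE = 4  # bytes per float32 element

# First four addresses printed for a (row 0, then the start of row 1)
a_addrs = [94418119497152, 94418119497168, 94418119497184, 94418119497156]
a_offsets = [(addr - a_addrs[0]) // ELEMENT_SIZE for addr in a_addrs]
print(a_offsets)  # [0, 4, 8, 1]

def offsets(shape, strides):
    """Element offset of every [i][j], visited row by row (left to right)."""
    rows, cols = shape
    return [i * strides[0] + j * strides[1] for i in range(rows) for j in range(cols)]

print(offsets((4, 3), (1, 4)))  # a: [0, 4, 8, 1, 5, 9, 2, 6, 10, 3, 7, 11]
print(offsets((4, 3), (3, 1)))  # b: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
```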

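In other words, `b` is stored in row-major order: moving right along a row (axis 1) takes you to the next address in memory.

`a`, however, still shares the memory of the original (3, 4) tensor. For `a`, it is moving down a column (axis 0) that takes you to the next address.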
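Put another way, a 2-D tensor is contiguous when its strides equal the row-major strides implied by its shape. The last dimension has stride 1, and each earlier dimension's stride is the product of the sizes after it. The helpers `row_major_strides` and `looks_contiguous` below are hypothetical and simplified. They are not PyTorch's own check, because `Tensor.is_contiguous()` also ignores the strides of size-1 dimensions and treats empty tensors as contiguous.

```python
def row_major_strides(shape):
    """Strides (in elements) of a densely packed, row-major tensor of `shape`."""
    strides = []
    step = 1
    for size in reversed(shape):
        strides.append(step)
        step *= size
    return tuple(reversed(strides))

def looks_contiguous(shape, strides):
    # Simplified: ignores PyTorch's special handling of size-0/size-1 dims
    return tuple(strides) == row_major_strides(shape)

print(row_major_strides((4, 3)))          # (3, 1)
print(looks_contiguous((4, 3), (3, 1)))   # True  -> b
print(looks_contiguous((4, 3), (1, 4)))   # False -> a
```

With PyTorch installed, `looks_contiguous(a.shape, a.stride())` should agree with `a.is_contiguous()` for the two tensors above.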

So `a` is non-contiguous (contiguous=False).

`b` is contiguous (contiguous=True).

We can make `a` contiguous by calling `contiguous()`, which copies its data into a new, row-major memory block.

```python
a.is_contiguous()  # False

# copy a into contiguous (row-major) memory
a = a.contiguous()

a.is_contiguous()  # True
```
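To confirm what `contiguous()` changed, you can compare the old and new `a`. The values are identical, but the result lives in a new memory block. Its strides are now row-major, so its element addresses step by 4 bytes, just like `b`'s. This is a short sketch, and the name `a_old` is used only for illustration.

```python
import torch

a_old = torch.randn(3, 4)
a_old.transpose_(0, 1)        # non-contiguous, strides (1, 4)
a = a_old.contiguous()        # copy into row-major memory

print(torch.equal(a, a_old))              # True: same values
print(a.data_ptr() == a_old.data_ptr())   # False: new memory block
print(a.stride())                         # (3, 1)

# Addresses now increase by one element (4 bytes for float32) per step
addrs = [a[i][j].data_ptr() for i in range(4) for j in range(3)]
steps = {later - earlier for earlier, later in zip(addrs, addrs[1:])}
print(steps)                              # {4}
```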

| view vs reshape, contiguous vs non-contiguous (0) | 2023.01.11 |

|---|---|

| ERROR: Failed building wheel for pytorch (0) | 2022.12.29 |

| PyTorch-permute vs transpose (0) | 2022.12.05 |

| RNN (0) | 2022.08.22 |

| Word2Vec VS Neural networks Emedding (0) | 2022.08.21 |