- Sequential

- Builds a model as a sequential structure: a stack of layers from the input layer to the output layer.

- It lets you stack the layers one by one.

- It chains several functions, such as Wx+b and Sigmoid, so that the output of one becomes the input of the next.
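To make the "chaining functions" idea concrete, here is a minimal pure-Python sketch. It is not Keras code: the helper names `affine` and `chain` and the weight values are assumptions made up for illustration only.

```python
import math

def affine(W, b):
    """Return a function that computes Wx + b (W is a list of rows, b a list of biases)."""
    def apply(x):
        return [sum(w * v for w, v in zip(row, x)) + bias
                for row, bias in zip(W, b)]
    return apply

def sigmoid(x):
    """Apply the sigmoid function to every element of x."""
    return [1.0 / (1.0 + math.exp(-v)) for v in x]

def chain(*functions):
    """Feed the output of each function into the next one, in order."""
    def apply(x):
        for f in functions:
            x = f(x)
        return x
    return apply

# Hypothetical weights and bias, chosen only so the arithmetic is easy to follow.
model = chain(affine([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0]), sigmoid)
output = model([2.0, 2.0])  # Wx+b gives [0.0, 1.0], then sigmoid is applied
print(output)
```

A Sequential model does the same thing at a larger scale: each layer is a function, and the model applies them in the order they were stacked.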

- model.add()

- Adds a layer to the model: an input layer, a hidden layer, or an output layer.

- You can construct the model from various layer types (Dense, Conv2D, MaxPooling2D, LSTM, etc.).
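What `add()` does can be pictured with a toy class. This is only a sketch: `TinySequential`, `double`, and `add_one` are hypothetical names, and the class is a stand-in, not the Keras implementation.

```python
class TinySequential:
    """Toy stand-in for a sequential model (NOT the Keras class)."""

    def __init__(self):
        self.layers = []

    def add(self, layer):
        # Each call appends one layer to the end of the stack.
        self.layers.append(layer)

    def predict(self, x):
        # Layers run in the order they were added.
        for layer in self.layers:
            x = layer(x)
        return x

def double(x):
    return x * 2

def add_one(x):
    return x + 1

model = TinySequential()
model.add(double)
model.add(add_one)
result = model.predict(3)  # (3 * 2) + 1

reversed_model = TinySequential()
reversed_model.add(add_one)
reversed_model.add(double)
reversed_result = reversed_model.predict(3)  # (3 + 1) * 2

print(result, reversed_result)
```

The two results differ, which shows that the order of the `add()` calls defines the model.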

- input_shape

- It is specified only in the first layer; the subsequent layers automatically infer the shape of their input from the previous layer's output.

- It must be given as a tuple (the batch dimension is not included).

- 2d data

input_shape=(num_features,)

For example,

each 28*28 black-and-white image, flattened into 784 values, can be represented as:

model = Sequential()

model.add(Dense(256, activation='relu', input_shape=(28*28,)))

- 3d data

input_shape=(num_time_steps, num_features)

num_time_steps : the number of time steps in sequential data

For example,

390 : time steps per day

3 : each time step measures 3 features, such as stock price, trading volume, and index.

model = Sequential()

model.add(LSTM(256, input_shape=(390, 3)))

- 4d data

Typically, color image data.

input_shape=(height, width, channel)

model = Sequential()

model.add(Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)))

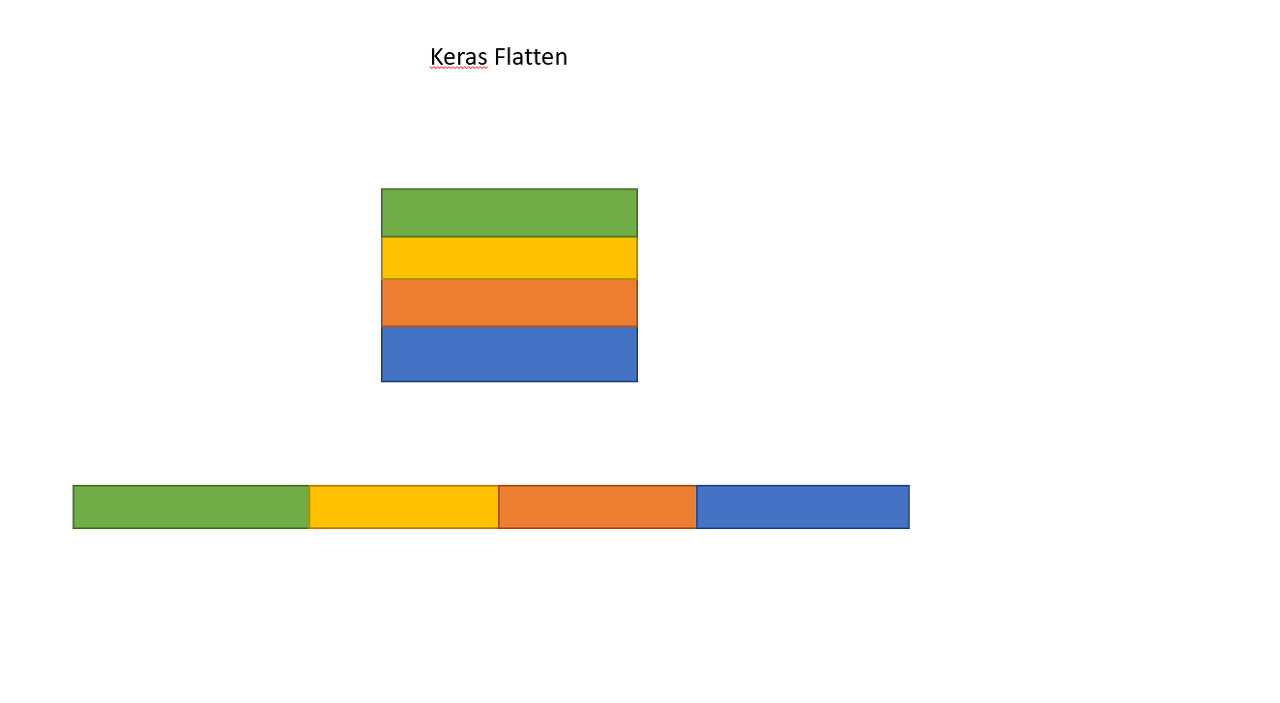
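The names "2d", "3d", and "4d" data count the batch dimension, which `input_shape` leaves out. The sketch below illustrates this with a hypothetical helper, `batch_shape`, that is not part of Keras; it prepends `None` for the batch size and enforces the tuple rule stated above.

```python
def batch_shape(input_shape):
    """Prepend the (unknown) batch dimension to a per-sample shape tuple."""
    if not isinstance(input_shape, tuple):
        # (784) is just the integer 784 in Python; (784,) is a one-element tuple.
        raise TypeError("input_shape must be a tuple, e.g. (784,) rather than (784)")
    return (None,) + input_shape

flat_image = batch_shape((28 * 28,))    # 2d data: (batch, features)
stock_series = batch_shape((390, 3))    # 3d data: (batch, time steps, features)
color_image = batch_shape((32, 32, 3))  # 4d data: (batch, height, width, channel)

for shape in (flat_image, stock_series, color_image):
    print(len(shape), shape)
```

The trailing comma in `(28*28,)` matters: without it, Python treats the parentheses as plain grouping and the value is an integer, not a tuple.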

- Flatten

Flattens the input data to one dimension, converting a 2D or 3D feature map into a 1D vector without changing the values or the batch size. Because it only reshapes the data, it has no weights.

import tensorflow as tf

model = tf.keras.Sequential()

model.add(tf.keras.layers.Conv1D(32, 3, input_shape=(100, 1)))

model.output_shape  # (None, 98, 32)

model.add(tf.keras.layers.MaxPooling1D(2))

model.output_shape  # (None, 49, 32)

model.add(tf.keras.layers.Flatten())

model.output_shape  # (None, 1568) <- 49*32 = 1568
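The shapes in the comments above can be checked by hand. The following sketch reproduces the arithmetic in plain Python, assuming the layer defaults used in the example (no padding, convolution stride 1, pooling stride equal to the pool size). The helper names are hypothetical.

```python
def conv1d_output_length(length, kernel_size):
    """Output length of a 1D convolution with no padding and stride 1."""
    return length - kernel_size + 1

def pool1d_output_length(length, pool_size):
    """Output length of non-overlapping 1D pooling with no padding."""
    return length // pool_size

def flatten_size(shape):
    """Number of values per sample after flattening (batch dimension excluded)."""
    size = 1
    for dim in shape:
        size *= dim
    return size

filters = 32
after_conv = conv1d_output_length(100, 3)          # 100 - 3 + 1
after_pool = pool1d_output_length(after_conv, 2)   # 98 // 2
after_flatten = flatten_size((after_pool, filters))  # 49 * 32

print(after_conv, after_pool, after_flatten)
```

Flatten only multiplies the non-batch dimensions together, which is why it needs no trainable parameters.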

- Dense

A fully connected layer: every input neuron is connected to every output neuron.

In image classification, a Flatten layer first converts the feature map into a 1D vector, and Dense layers are then added to compute the class probabilities.

128 : number of output neurons.

tf.keras.Sequential([

tf.keras.layers.Flatten(input_shape=(32, 32, 3)),

tf.keras.layers.Dense(128, activation='relu')

])
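Because every input is connected to every output, the number of trainable parameters in a Dense layer grows quickly. As a rough sketch (the helper `dense_param_count` is a hypothetical name, and it assumes the layer uses a bias term), the count for the example above can be worked out as:

```python
def dense_param_count(num_inputs, num_outputs):
    """One weight per input-output pair, plus one bias per output neuron."""
    return num_inputs * num_outputs + num_outputs

flattened_inputs = 32 * 32 * 3  # values per image after Flatten
params = dense_param_count(flattened_inputs, 128)

print(flattened_inputs, params)
```

Here the 3072 flattened values feed 128 neurons, giving 3072 * 128 weights and 128 biases.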
