- Activation function

An activation function takes the output of a neuron (the weighted sum of its inputs plus a bias) and decides whether this neuron is going to fire or not. In other words, it answers the question "should this neuron 'fire' or not?"
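As a minimal sketch of that idea in plain Python: the neuron computes a weighted sum of its inputs plus a bias, and the activation function turns that sum into the neuron's output. The helper `neuron_output` and all numeric values are hypothetical, and the step function's threshold at 0 is an assumed convention.

```python
import math

def neuron_output(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus the bias, passed through an activation function."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

def step(z):
    # "Fires" (1.0) when the weighted sum is non-negative, stays silent (0.0) otherwise
    return 1.0 if z >= 0 else 0.0

def sigmoid(z):
    # A smooth version of the step: a firing "strength" between 0 and 1
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical example values
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, 0.2]
bias = -0.1

print("step:   ", neuron_output(inputs, weights, bias, step))
print("sigmoid:", neuron_output(inputs, weights, bias, sigmoid))
```

Here the weighted sum is about 0.2, so the step function fires with a hard 1.0, while the sigmoid reports a graded value slightly above 0.5.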

| Activation function | ||

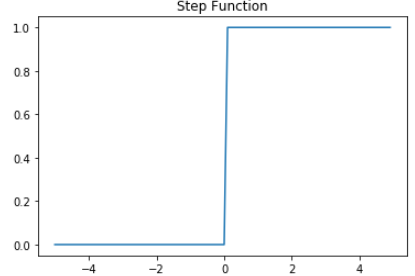

| Step function |  |

Hardly used. |

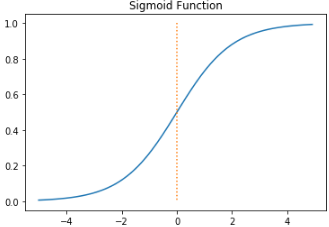

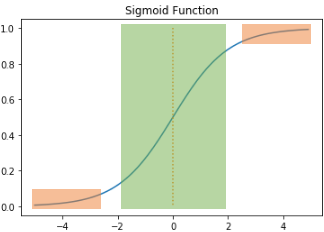

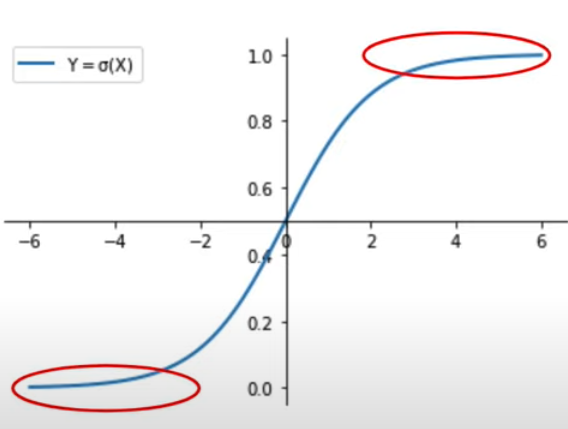

| Sigmoid function |

|

Used in Binary Classification. Used in output layer usually. Vanishing Gradient possible.   |

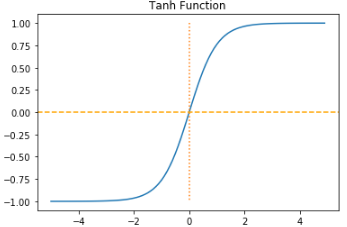

| Hyperbolic tangent function |  |

|

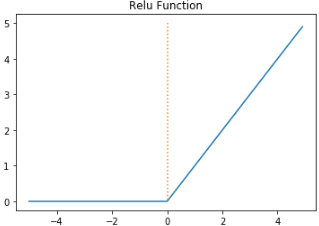

| ReLU(Rectified Linear Unit) function |  f(x) = max(0,x) |

If input : negative -> output : 0 input : positive -> output : input |

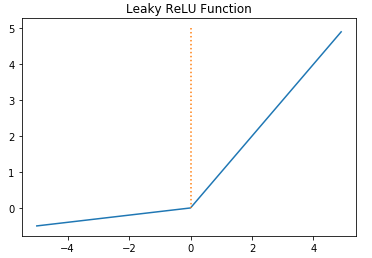

| Leaky ReLU |  |

If input : negative -> output : very small number such as 0.0001 input : positive -> output : input |

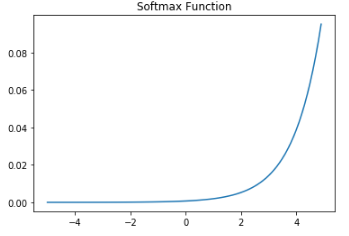

| Softmax function |  |

Used in MultiClass Classification. Used in output layer usually. |

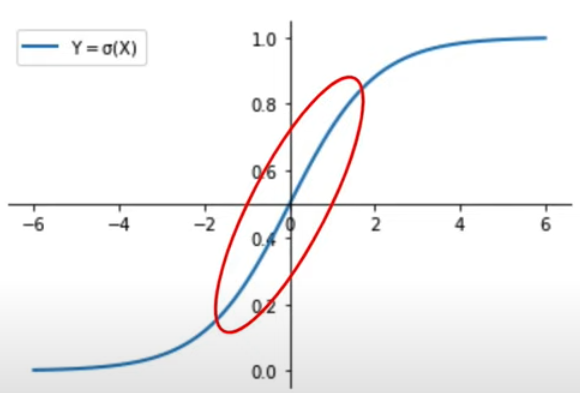
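The functions in the table can be sketched in plain Python on scalars (softmax on a list). The function names and the Leaky ReLU slope `alpha=0.01` are illustrative assumptions; deep learning frameworks ship their own vectorized versions.

```python
import math

def step(x):
    # 1.0 for x >= 0, else 0.0 (threshold at 0 is a common convention)
    return 1.0 if x >= 0 else 0.0

def sigmoid(x):
    # Numerically stable form: avoids overflow in exp() for large |x|
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def tanh(x):
    return math.tanh(x)

def relu(x):
    return max(0.0, x)

def leaky_relu(x, alpha=0.01):
    # alpha: assumed small slope applied to negative inputs
    return x if x > 0 else alpha * x

def softmax(logits):
    # Subtracting the max before exp() avoids overflow and does not change
    # the result, because softmax is invariant to shifting all inputs.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

for f in (step, sigmoid, tanh, relu, leaky_relu):
    print(f.__name__, [round(f(x), 4) for x in (-2.0, 0.0, 2.0)])
print("softmax", [round(p, 4) for p in softmax([2.0, 1.0, 0.1])])
```

The softmax output sums to 1 and keeps the ordering of the input scores, which is why it is a natural choice for the output layer in multiclass classification.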

- Sigmoid

  - It limits its output to the range (0, 1), so a neuron's output cannot reach really high values, which could otherwise create a problem for the optimizer.

  - It has a steep slope roughly between -2 and 2 on the x axis, so small changes of x in this region lead to significant changes in y.

  - Outside this region the curve flattens out and the gradient becomes very small (even its maximum, at x = 0, is only 0.25). Since the updates of the model weights depend on the value of the gradient, this can make learning very slow when the sigmoid is used (the vanishing gradient problem).
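The saturation can be checked numerically with the standard identity sigmoid'(x) = sigmoid(x) * (1 - sigmoid(x)). The last line is a simplified illustration that assumes one sigmoid factor per layer and ignores the weight terms that also appear in backpropagation.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    # d/dx sigmoid(x) = sigmoid(x) * (1 - sigmoid(x))
    s = sigmoid(x)
    return s * (1.0 - s)

for x in (0.0, 2.0, 5.0, 10.0):
    print(f"x={x:5.1f}  sigmoid={sigmoid(x):.5f}  gradient={sigmoid_grad(x):.6f}")

# Backpropagation multiplies one such factor per layer. Even in the best case
# (gradient 0.25 at x = 0), ten stacked sigmoid layers shrink it to 0.25**10.
print("best case after 10 layers:", 0.25 ** 10)
```

The gradient is largest at x = 0 and drops by orders of magnitude once |x| moves past the steep region, which is what stalls the weight updates.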

- ReLU (Rectified Linear Unit)

  - Dying ReLU (permanently deactivated neurons): when x is negative, the output is zero and so is the gradient. A neuron whose input is negative for every example therefore always outputs 0 and receives no gradient, so the gradient descent algorithm no longer performs any updates on its weights.

  - When x is positive, it acts as a linear function of x, so its output is unbounded and can reach very high values.

  - It is nonlinear, and it is differentiable everywhere except at zero.
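A minimal sketch of a dying ReLU neuron, assuming a hypothetical one-input neuron `relu(w * x + b)` whose bias has been pushed far below zero. By the chain rule the gradient with respect to `w` is `relu'(w * x + b) * x`, which is zero whenever `w * x + b` is negative. Using 0 as the derivative at exactly zero is a convention, not a mathematical fact.

```python
def relu(z):
    return max(0.0, z)

def relu_grad(z):
    # 1 for z > 0, 0 for z < 0; undefined at z == 0, where 0 is used by convention
    return 1.0 if z > 0 else 0.0

def weight_gradients(w, b, inputs):
    """d(output)/dw for a one-input neuron output = relu(w * x + b), per input x."""
    # Chain rule: d/dw relu(w*x + b) = relu'(w*x + b) * x
    return [relu_grad(w * x + b) * x for x in inputs]

# Hypothetical "dead" neuron: the bias was pushed far below zero (e.g. by one
# large update), so w * x + b < 0 for every input in this range.
w, b = 0.5, -10.0
inputs = [0.0, 1.0, 3.0, 5.0]

for x in inputs:
    z = w * x + b
    print(f"x={x:3.1f}  z={z:5.1f}  output={relu(z)}")
print("dead neuron gradients:   ", weight_gradients(w, b, inputs))
# The same neuron with a positive bias still receives a gradient
print("healthy neuron gradients:", weight_gradients(w, 1.0, inputs))
```

Every gradient of the dead neuron is 0.0, so no optimizer step can change `w` or move it back into the active region.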

- Leaky ReLU

  - For negative values of x it still behaves linearly, but the slope coefficient is very small. By doing so, it does not fully deactivate the neuron: a small gradient still flows, which gives the neuron the opportunity to be reactivated.
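A toy sketch of that reactivation, under loudly hypothetical assumptions: a single neuron with one input `x = 3`, a fixed weight `w = 0.5`, a bias that starts at -10 (so `w * x + b < 0`), a squared-error loss toward a target of 1.0, and plain gradient descent on the bias only. `ALPHA = 0.01` is an assumed Leaky ReLU slope.

```python
ALPHA = 0.01  # assumed Leaky ReLU slope for negative inputs

def relu(z):
    return max(0.0, z)

def relu_grad(z):
    return 1.0 if z > 0 else 0.0

def leaky_relu(z):
    return z if z > 0 else ALPHA * z

def leaky_relu_grad(z):
    return 1.0 if z > 0 else ALPHA

def train_bias(act, act_grad, w=0.5, b=-10.0, x=3.0, target=1.0, lr=0.5, steps=1000):
    """Hypothetical toy setup: gradient descent on the bias of one neuron,
    loss = (act(w * x + b) - target) ** 2, with w and x held fixed."""
    for _ in range(steps):
        z = w * x + b
        out = act(z)
        # dL/db = 2 * (out - target) * act'(z), since dz/db = 1
        b -= lr * 2.0 * (out - target) * act_grad(z)
    return b

print("ReLU bias after training:      ", train_bias(relu, relu_grad))
print("Leaky ReLU bias after training:", train_bias(leaky_relu, leaky_relu_grad))
```

With plain ReLU the bias never moves from -10.0 because every gradient is 0. With Leaky ReLU the small slope keeps a gradient flowing, the bias creeps upward until `w * x + b` turns positive, and it then settles near -0.5, where the output matches the target.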
