- Jaccard Similarity

- Jaccard similarity is a token-based algorithm: the expected input is a set of tokens rather than a complete string. The idea is to find the tokens shared by both sets; the more tokens the two sets have in common, the more similar they are. A string can be transformed into a set by splitting it on a delimiter, so a sentence can be turned into word tokens or character n-grams. Note that tokens of different lengths carry equal weight.

Reference : itnext.io/string-similarity-the-basic-know-your-algorithms-guide-3de3d7346227
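As a rough illustration of the tokenization step described above, here is a minimal sketch. The helper names `word_tokens` and `char_ngrams` are hypothetical and not taken from any library.

```python
def word_tokens(text, delimiter=None):
    """Split text on a delimiter (any whitespace by default) into a set of word tokens."""
    return set(text.split(delimiter))


def char_ngrams(text, n=2):
    """Return the set of overlapping character n-grams of length n."""
    return {text[i:i + n] for i in range(len(text) - n + 1)}


words = word_tokens("hello new world")   # {'hello', 'new', 'world'}
bigrams = char_ngrams("hello", n=2)      # {'he', 'el', 'll', 'lo'}
print(words)
print(bigrams)
```

Because both helpers return sets, repeated tokens are counted once and every token has the same weight regardless of its length.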

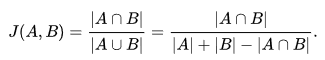

The numerator is the intersection (the tokens common to both sets).

The denominator is the union (all unique tokens across both sets).

The second case applies when the two sets overlap: simply adding up the tokens of both strings would count the common tokens twice, so they must be subtracted once.
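The double-counting point can be checked with a small sketch. The token sets below are illustrative values chosen for this example.

```python
tokens_a = {"hello", "new", "world"}
tokens_b = {"hello", "world"}

common = tokens_a & tokens_b                 # intersection: {'hello', 'world'}
naive_total = len(tokens_a) + len(tokens_b)  # 5, counts the common tokens twice
union_size = naive_total - len(common)       # 3, common tokens counted once

# The corrected count matches the size of the actual set union.
assert union_size == len(tokens_a | tokens_b)

similarity = len(common) / union_size
print(similarity)  # 0.6666666666666666
```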

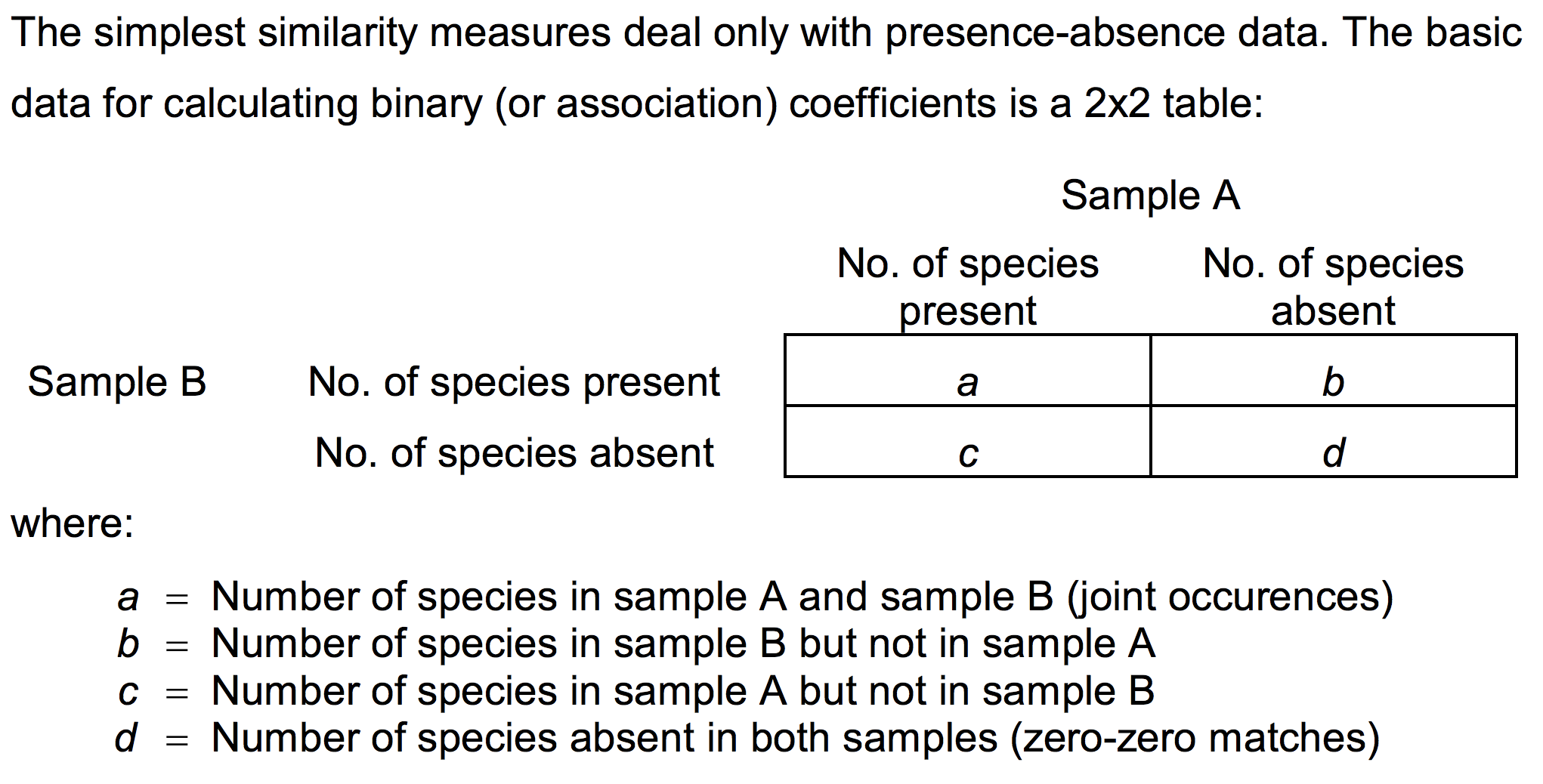

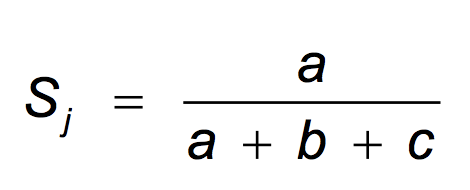

Sj is Jaccard's similarity coefficient.

a, b, and c are as defined above in the presence-absence matrix.

Let's use this definition.

Reference : www.zoology.ubc.ca/~krebs/downloads/krebs_chapter_12_2017.pdf
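The presence-absence form can be sketched as follows. This assumes the usual reading of the matrix, where a is the number of items present in both samples, and b and c are the numbers of items present in only one of them, giving Sj = a / (a + b + c). The function names are hypothetical, and which one-sided count is called b versus c does not change the result.

```python
def presence_absence_counts(sample_a, sample_b):
    """Return (a, b, c) under the assumed presence-absence convention."""
    a = len(sample_a & sample_b)  # present in both samples
    b = len(sample_b - sample_a)  # present only in sample B
    c = len(sample_a - sample_b)  # present only in sample A
    return a, b, c


def jaccard_coefficient(a, b, c):
    """Jaccard's coefficient computed from presence-absence counts."""
    return a / (a + b + c)


a, b, c = presence_absence_counts({"hello", "new", "world"}, {"hello", "world"})
print(a, b, c)                       # 2 0 1
print(jaccard_coefficient(a, b, c))  # 0.6666666666666666
```

Since a + b + c is the size of the union, this gives the same value as the intersection-over-union form.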

- Python Jaccard-index package

import textdistance

t1="hello world".split()

t2="world hello".split()

textdistance.jaccard(t1,t2)

>>>1.0

t1="hello new world".split()

t2="hello world".split()

textdistance.jaccard(t1,t2)

>>>0.6666666666666666

I first tokenize each string on the default whitespace delimiter so that the words become tokens, then compute the similarity score. In the first example, both words appear in both strings, so the score is 1. In the second example, two of the three unique words are shared, so the score is 2/3.

The result is 1 if t1 and t2 contain the same tokens, and 0 if they have no tokens in common.

doc1='apple banana everyone like likey watch card holder'

doc2='apple banana coupon passport love you'

tok1=doc1.split()

tok2=doc2.split()

print(tok1)

print(tok2)

>>>['apple', 'banana', 'everyone', 'like', 'likey', 'watch', 'card', 'holder']

['apple', 'banana', 'coupon', 'passport', 'love', 'you']

union=set(tok1).union(set(tok2))

union

>>>{'apple',

'banana',

'card',

'coupon',

'everyone',

'holder',

'like',

'likey',

'love',

'passport',

'watch',

'you'}

intersection=set(tok1).intersection(set(tok2))

intersection

>>>{'apple', 'banana'}

len(intersection)/len(union)

>>>0.16666666666666666

This is the Jaccard index between doc1 and doc2. The two documents share 2 of their 12 unique words, so the Jaccard similarity (the rate of common words) is about 16.7%.
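The manual steps above can be wrapped in one small function. This is a minimal sketch: the name `jaccard_similarity` is hypothetical, and returning 1.0 when both documents are empty is a convention assumed here, not something the original post specifies.

```python
def jaccard_similarity(doc1, doc2):
    """Jaccard index of two strings, using whitespace-separated words as tokens."""
    tokens1 = set(doc1.split())
    tokens2 = set(doc2.split())
    union = tokens1 | tokens2
    if not union:
        # Assumption: two empty documents are treated as identical.
        return 1.0
    return len(tokens1 & tokens2) / len(union)


doc1 = 'apple banana everyone like likey watch card holder'
doc2 = 'apple banana coupon passport love you'
print(jaccard_similarity(doc1, doc2))  # 0.16666666666666666
```

Converting each token list to a set means a word repeated within one document is counted only once.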
