- LeNet-5

LeNet-5 is one of the very first convolutional neural networks (CNNs). The name comes from Yann LeCun, the lead author of the 1998 paper that introduced it.

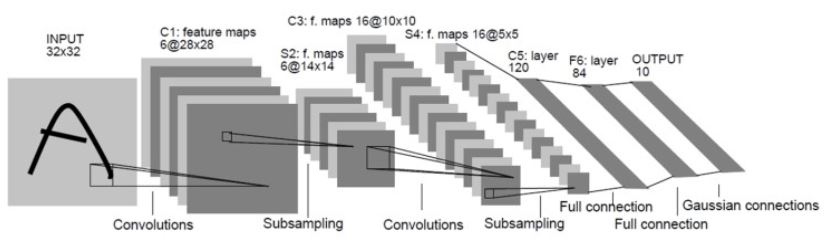

- Each convolutional block has three parts: convolution, pooling, and a nonlinear activation function.

- Convolution is used to extract spatial features (early work described convolutional units in terms of local receptive fields).

- Average pooling is used for subsampling.

- ‘tanh’ is used as the activation function.

- A multi-layer perceptron (fully connected layers) serves as the final classifier.

- Sparse connections between layers (see the C3 layer) reduce the amount of computation.

https://medium.com/@siddheshb008/lenet-5-architecture-explained-3b559cb2d52b

https://velog.io/@lighthouse97/LeNet-5%EC%9D%98-%EC%9D%B4%ED%95%B4
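To make the layer sizes concrete, here is a small sketch that traces the spatial size of a 32 x 32 input through LeNet-5. It uses the usual formula for a convolution or pooling window without padding, out = (in - kernel) / stride + 1. The helper names `conv_out` and `trace_shapes` are hypothetical, and the single-channel 32 x 32 input is an assumption matching the original digit-recognition setup.

```python
def conv_out(size: int, kernel: int, stride: int = 1) -> int:
    """Output side length of a 'valid' (no padding) convolution or pooling window."""
    return (size - kernel) // stride + 1


def trace_shapes(size: int = 32):
    """Return (layer name, height, width, channels) for each LeNet-5 stage."""
    shapes = [("input", size, size, 1)]
    size = conv_out(size, 5)      # C1: 6 filters of 5x5
    shapes.append(("C1", size, size, 6))
    size = conv_out(size, 2, 2)   # S2: 2x2 subsampling, stride 2
    shapes.append(("S2", size, size, 6))
    size = conv_out(size, 5)      # C3: 16 maps, 5x5 filters
    shapes.append(("C3", size, size, 16))
    size = conv_out(size, 2, 2)   # S4: 2x2 subsampling, stride 2
    shapes.append(("S4", size, size, 16))
    size = conv_out(size, 5)      # C5: 120 filters of 5x5
    shapes.append(("C5", size, size, 120))
    return shapes


for name, h, w, c in trace_shapes():
    print(f"{name}: {h} x {w} x {c}")
```

Every stage shrinks the map: 32 → 28 → 14 → 10 → 5 → 1, which is why C5 ends up with a 1 x 1 output.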

- C3 Layer (Convolution)

Step 1.

- Take each of the 6 contiguous subsets of 3 feature maps from S2 (14 x 14 x 6), counting cyclically so that maps 4, 5, 0 also form a subset.

- Convolve each subset with a 5 x 5 x 3 filter.

- Result: 6 feature maps of 10 x 10 (10 x 10 x 6).

Step 2.

- Take each of the 6 contiguous subsets of 4 feature maps from S2 (14 x 14 x 6), counting cyclically.

- Convolve each subset with a 5 x 5 x 4 filter.

- Result: 6 feature maps of 10 x 10 (10 x 10 x 6).

Step 3.

- Take three non-contiguous subsets of 4 feature maps from S2 (14 x 14 x 6).

- Convolve each subset with a 5 x 5 x 4 filter.

- Result: 3 feature maps of 10 x 10 (10 x 10 x 3).

Step 4.

- Take all 6 feature maps of S2 (14 x 14 x 6).

- Convolve them with a 5 x 5 x 6 filter.

- Result: 1 feature map of 10 x 10 (10 x 10 x 1).

- As a result, C3 produces a 10 x 10 x 16 feature map (16 = 6 + 6 + 3 + 1).

- Number of trainable parameters: ∑ (weights per filter + 1 bias) × number of feature maps = [(5*5*3 + 1)*6] + [(5*5*4 + 1)*6] + [(5*5*4 + 1)*3] + [(5*5*6 + 1)*1] = 456 + 606 + 303 + 151 = 1516

https://towardsai.net/p/deep-learning/the-architecture-and-implementation-of-lenet-5
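The C3 connection scheme and its parameter count can be checked with a short script. This is a sketch: it builds the 16 input subsets from Steps 1 to 4, treating "contiguous" as wrapping around from map 5 back to map 0. The three non-contiguous subsets {0, 1, 3, 4}, {1, 2, 4, 5}, {0, 2, 3, 5} are my reading of the connection table in the original paper, so treat them as an assumption; the parameter count depends only on the subset sizes. The names `c3_connections` and `c3_param_count` are hypothetical.

```python
def c3_connections():
    """List, for each of the 16 C3 maps, the indices of the S2 maps it reads."""
    n = 6  # number of S2 feature maps
    table = []
    # Step 1: 6 maps, each reading 3 contiguous S2 maps (wrapping around)
    table += [[(start + i) % n for i in range(3)] for start in range(n)]
    # Step 2: 6 maps, each reading 4 contiguous S2 maps (wrapping around)
    table += [[(start + i) % n for i in range(4)] for start in range(n)]
    # Step 3: 3 maps reading non-contiguous subsets of 4 (assumed from the paper's table)
    table += [[0, 1, 3, 4], [1, 2, 4, 5], [0, 2, 3, 5]]
    # Step 4: 1 map reading all 6 S2 maps
    table.append(list(range(n)))
    return table


def c3_param_count(table, kernel=5):
    """Each C3 map has one kernel x kernel filter slice per input map, plus one bias."""
    return sum(kernel * kernel * len(inputs) + 1 for inputs in table)


table = c3_connections()
print(len(table))                # 16 feature maps
print(c3_param_count(table))     # 1516 with the sparse table
print(16 * (5 * 5 * 6 + 1))      # 2416 if every C3 map read all 6 S2 maps
```

The last line shows what the sparse table saves: a fully connected C3 would need 2416 parameters instead of 1516.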

- S4 Layer (Subsampling)

Step 1.

Take inputs from C3 (10 x 10 x 16).

Step 2.

Apply 2 x 2 average pooling with stride 2 to each of the 16 maps, giving 5 x 5 x 16. Flattened, this is a layer of 5 * 5 * 16 = 400 values.

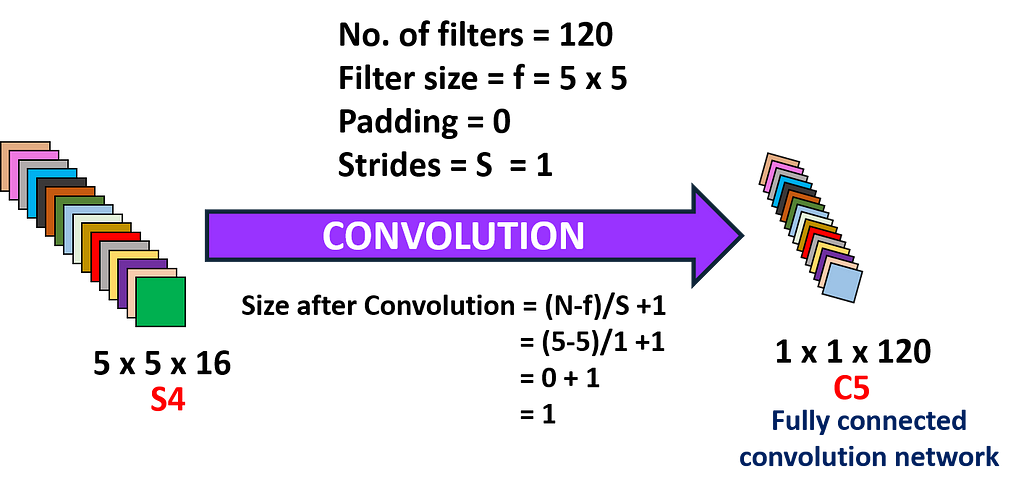

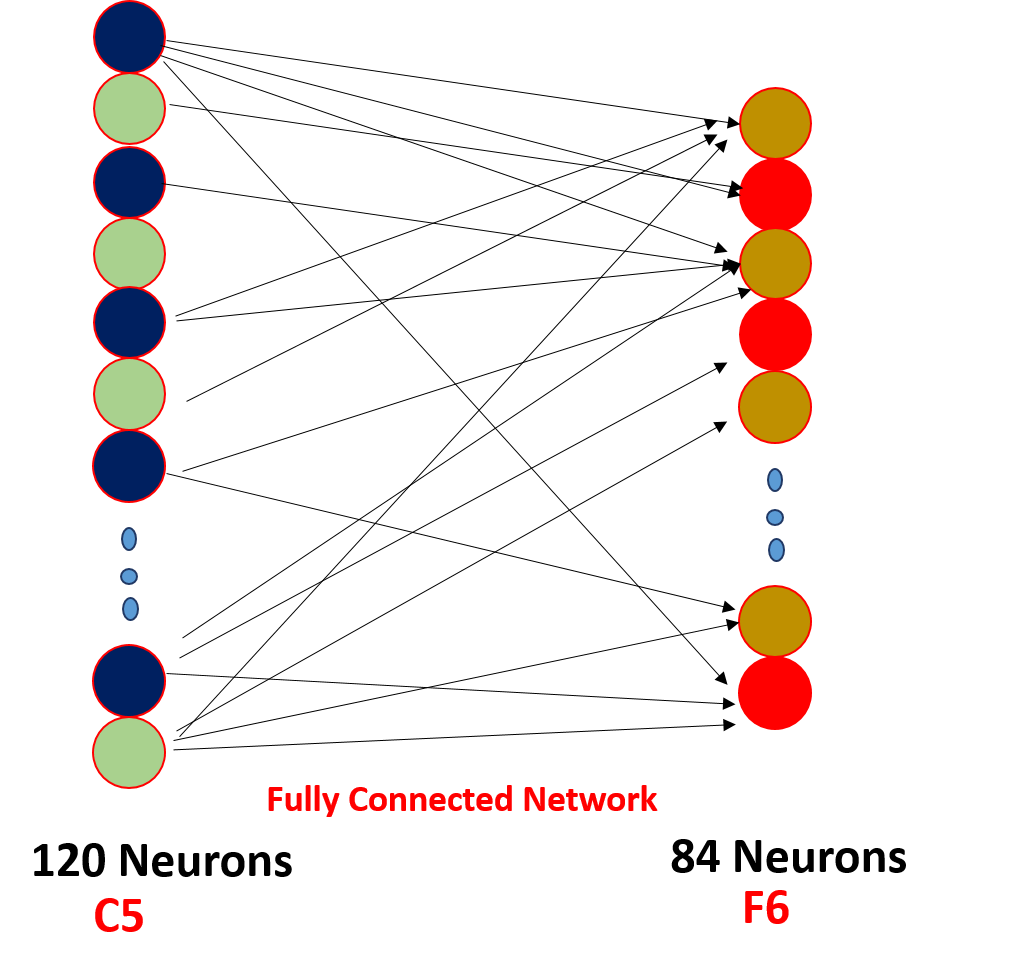
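The S4 pooling and flattening can be sketched in plain Python. This assumes plain 2 x 2 averaging with stride 2, as in the Keras model later in this post; the original paper's subsampling units also multiplied the average by a trainable coefficient and added a trainable bias, which this sketch leaves out. The function names and the dummy input are hypothetical.

```python
def avg_pool_2x2(fmap):
    """2x2 average pooling with stride 2 on one feature map (a list of rows)."""
    h, w = len(fmap), len(fmap[0])
    return [
        [
            (fmap[r][c] + fmap[r][c + 1] + fmap[r + 1][c] + fmap[r + 1][c + 1]) / 4
            for c in range(0, w - 1, 2)
        ]
        for r in range(0, h - 1, 2)
    ]


def s4_and_flatten(c3_maps):
    """Pool each C3 map, then flatten map by map into one vector."""
    pooled = [avg_pool_2x2(m) for m in c3_maps]
    return [value for m in pooled for row in m for value in row]


# Dummy C3 output: 16 maps of 10 x 10 filled with a simple pattern
c3_maps = [[[float(k + r + c) for c in range(10)] for r in range(10)] for k in range(16)]
flat = s4_and_flatten(c3_maps)
print(len(flat))  # 400 = 5 * 5 * 16
```

This flattens map by map; Keras's `Flatten` on a channels-last tensor orders the values differently (channels innermost), but the length is the same 400.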

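- C5 Layer (Convolution)

- Take inputs from S4 (5 x 5 x 16 = 400 values).

- Convolve with 120 filters of size 5 x 5 x 16.

- Result: a 1 x 1 x 120 feature map. Since each filter covers the whole 5 x 5 input, C5 acts like a fully connected layer from 400 to 120 units.

- Number of trainable parameters: (5*5*16 + 1)*120 = 48120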

https://www.youtube.com/watch?v=28SQ9wJ74vU

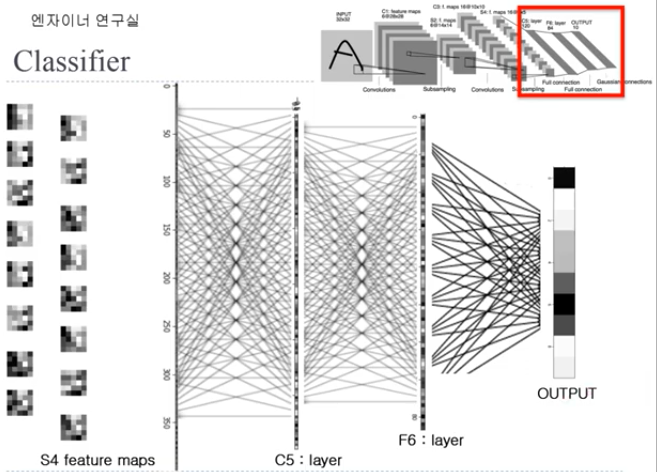
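The claim that C5 behaves like a fully connected layer can be checked numerically. The sketch below runs a sliding-window ("valid") convolution of a 5 x 5 x 16 input with 120 filters of the same size, and compares each 1 x 1 result with a plain dot product of the flattened input and filter plus bias. The weights are random stand-ins, not trained values, and the helper names are hypothetical.

```python
import random


def conv2d_valid(inputs, kernel, bias):
    """Valid cross-correlation of a (C, H, W) input with one (C, k, k) filter."""
    channels, h, w = len(inputs), len(inputs[0]), len(inputs[0][0])
    k = len(kernel[0])
    out = []
    for r in range(h - k + 1):
        row = []
        for c in range(w - k + 1):
            s = bias
            for ch in range(channels):
                for i in range(k):
                    for j in range(k):
                        s += inputs[ch][r + i][c + j] * kernel[ch][i][j]
            row.append(s)
        out.append(row)
    return out


def flatten(x):
    """Flatten a (C, H, W) nested list channel by channel."""
    return [v for ch in x for row in ch for v in row]


def random_volume(channels=16, size=5):
    """Random (channels, size, size) nested list with values in [-1, 1]."""
    return [[[random.uniform(-1, 1) for _ in range(size)] for _ in range(size)]
            for _ in range(channels)]


random.seed(0)
s4_out = random_volume()                        # stand-in for S4's 5 x 5 x 16 output
filters = [random_volume() for _ in range(120)] # 120 stand-in 5 x 5 x 16 filters
biases = [random.uniform(-1, 1) for _ in range(120)]

conv_result = [conv2d_valid(s4_out, f, b) for f, b in zip(filters, biases)]
dense_result = [sum(x * w for x, w in zip(flatten(s4_out), flatten(f))) + b
                for f, b in zip(filters, biases)]

print(len(conv_result), len(conv_result[0]), len(conv_result[0][0]))  # 120 1 1
print(all(abs(cv[0][0] - d) < 1e-9 for cv, d in zip(conv_result, dense_result)))  # True
print((5 * 5 * 16 + 1) * 120)  # 48120 trainable parameters
```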

- F6 Layer (Fully Connected)

- It consists of 84 neurons, each fully connected to the 120 outputs of C5.

- Each neuron computes the dot product of the input vector and its weight vector, then adds a bias.

- The result is passed through a sigmoid-shaped squashing function (a scaled tanh in the original paper).

- Number of trainable parameters: (120 + 1)*84 = 10164
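An F6 neuron is just a dot product plus a bias followed by the squashing function. The sketch below computes all 84 outputs. It assumes the scaled tanh f(a) = A·tanh(S·a) with A = 1.7159 and S = 2/3 from the original paper's appendix, and uses random stand-in weights rather than trained ones; the function names are hypothetical.

```python
import math
import random

A, S = 1.7159, 2.0 / 3.0  # scaled-tanh constants (assumed from the LeNet-5 paper's appendix)


def squash(a):
    """Scaled tanh squashing function f(a) = A * tanh(S * a)."""
    return A * math.tanh(S * a)


def dense_layer(x, weights, biases):
    """Fully connected layer: per neuron, dot product plus bias, then squash."""
    return [squash(sum(xi * wi for xi, wi in zip(x, w)) + b)
            for w, b in zip(weights, biases)]


random.seed(1)
c5_out = [random.uniform(-1, 1) for _ in range(120)]  # stand-in for C5's 120 outputs
weights = [[random.uniform(-0.1, 0.1) for _ in range(120)] for _ in range(84)]
biases = [0.0] * 84

f6_out = dense_layer(c5_out, weights, biases)
n_params = sum(len(w) for w in weights) + len(biases)
print(len(f6_out))  # 84
print(n_params)     # 10164 = (120 + 1) * 84
```

With these constants f(1) is very close to 1, so outputs stay in a range where the function is roughly linear for small inputs and saturates near ±1.7159.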

- Output Layer

- Take inputs from F6 (84 units).

- Apply the softmax activation function on the output layer. (The original paper used Euclidean radial basis function (RBF) units here; softmax is the usual modern replacement.)

- The other layers use tanh as the activation function; the output layer uses softmax because it gives the probability of each output class.

- The output layer is fully connected, with 10 softmax outputs corresponding to the digits 0 to 9.
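Softmax turns the 10 output scores into probabilities that are positive and sum to 1. Here is a minimal sketch; subtracting the largest score before exponentiating leaves the result unchanged but avoids overflow. The example scores are made up.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


scores = [0.1, 2.3, -1.0, 0.5, 0.0, 1.2, -0.3, 4.0, 0.7, -2.0]  # made-up scores for digits 0..9
probs = softmax(scores)
predicted_digit = max(range(10), key=lambda d: probs[d])
print(predicted_digit)       # 7
print(round(sum(probs), 6))  # 1.0
```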

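```python
import tensorflow as tf
from tensorflow.keras.layers import AveragePooling2D, Conv2D, Dense, Flatten


def create_network(input_shape=(32, 32, 1), num_classes=10):
    """Build a LeNet-5-style classifier.

    For a 43-class RGB dataset, call create_network((32, 32, 3), 43).
    """
    net = tf.keras.models.Sequential()
    net.add(tf.keras.Input(shape=input_shape))
    # C1: 6 filters of 5x5 -> 28 x 28 x 6
    net.add(Conv2D(6, kernel_size=(5, 5), strides=(1, 1), activation='tanh'))
    # S2: 2x2 average pooling, stride 2 -> 14 x 14 x 6
    net.add(AveragePooling2D(pool_size=(2, 2), strides=(2, 2)))
    # C3: 16 filters of 5x5 -> 10 x 10 x 16
    # (each filter sees all 6 input maps, not the sparse table of the paper)
    net.add(Conv2D(16, kernel_size=(5, 5), strides=(1, 1), activation='tanh'))
    # S4: 2x2 average pooling, stride 2 -> 5 x 5 x 16
    net.add(AveragePooling2D(pool_size=(2, 2), strides=(2, 2)))
    # Flatten 5 x 5 x 16 -> 400 values
    net.add(Flatten())
    # C5 and F6 implemented as dense layers
    net.add(Dense(120, activation='tanh'))
    net.add(Dense(84, activation='tanh'))
    # Output layer: one softmax probability per class
    net.add(Dense(num_classes, activation='softmax'))
    return net
```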
