- VGG16

VGG16 is a convolutional neural network with 16 weight layers. The name comes from the Visual Geometry Group (VGG) at the University of Oxford, where the model was developed.

VGG was originally designed to study how the depth of a neural network affects its performance. To isolate the effect of depth, the filter size of every convolution layer was fixed at the smallest practical size, 3x3. With a large filter, the feature map shrinks quickly, which makes it hard to stack many layers.

Models before VGG used 11x11 or 7x7 filters with relatively large receptive fields.

VGG, in contrast, used only 3x3 filters and still improved image classification accuracy.

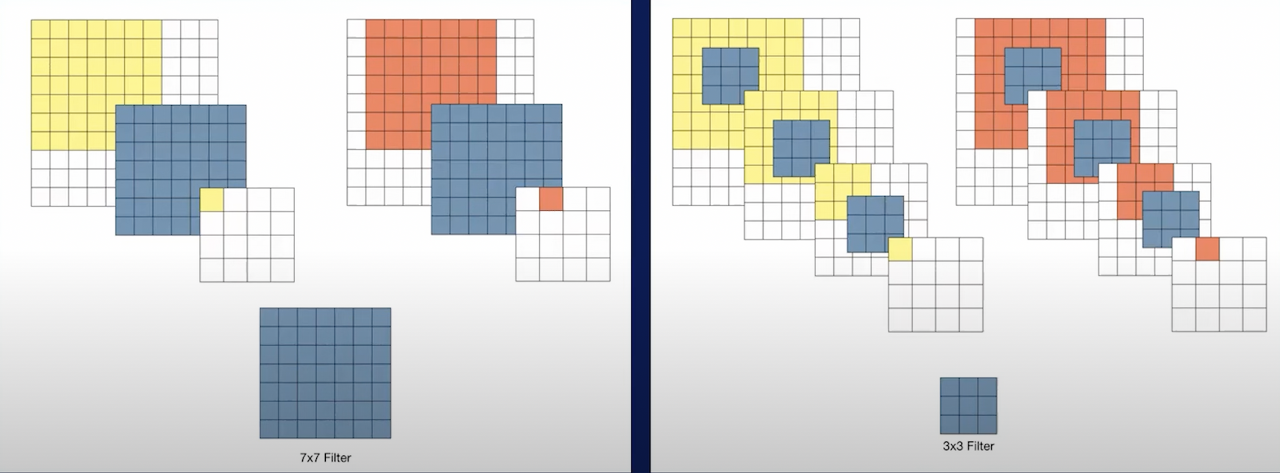

Suppose we have a 10x10 image and convolve it with a 7x7 filter (left) and with 3x3 filters (right), using stride 1 and no padding.

The 7x7 filter is applied once, while the 3x3 filter is applied three times.

Either way, the resulting feature map is 4x4: 10 → 4 with one 7x7 convolution, and 10 → 8 → 6 → 4 with three 3x3 convolutions.
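The arithmetic behind this comparison can be checked with a short sketch. It assumes square inputs, stride 1, and no padding (as the 10x10 example implies); the function names are made up for illustration.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial size after one convolution on a square input."""
    return (size + 2 * padding - kernel) // stride + 1


def stacked_output_size(size, kernel, num_layers):
    """Apply the same unpadded, stride-1 convolution num_layers times."""
    for _ in range(num_layers):
        size = conv_output_size(size, kernel)
    return size


def stacked_receptive_field(kernel, num_layers):
    """Input region seen by one output unit of stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel - 1)


one_7x7 = stacked_output_size(10, kernel=7, num_layers=1)
three_3x3 = stacked_output_size(10, kernel=3, num_layers=3)
print(one_7x7, three_3x3)  # 4 4

# Both options also cover the same 7x7 region of the input.
print(stacked_receptive_field(7, 1), stacked_receptive_field(3, 3))  # 7 7
```

So three stacked 3x3 convolutions give the same output size and the same receptive field as a single 7x7 convolution.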

- a deep neural network

- 3x3 convolution layer

- Increasing nonlinearity

- ReLU is applied once when the convolution uses a single 7x7 filter,

whereas it is applied three times when the convolution uses three stacked 3x3 filters.

Therefore, as the number of layers increases, nonlinearity increases and the model's features become more discriminative.

- Reducing Learning Parameters

- Number of parameters with one 7x7 filter (7 × 7): 49

- Number of parameters with three stacked 3x3 filters (3 layers × 3 × 3): 27
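A minimal sketch of this count, ignoring biases. The single-channel case reproduces the numbers above. The multi-channel case assumes every layer has the same number of input and output channels; the value 64 is only an illustrative choice.

```python
def conv_weights(kernel, in_channels=1, out_channels=1):
    """Number of weights in one convolution layer (biases ignored)."""
    return kernel * kernel * in_channels * out_channels


# Single input channel, single output channel.
single_7x7 = conv_weights(7)
stacked_3x3 = 3 * conv_weights(3)
print(single_7x7, stacked_3x3)  # 49 27

# With C channels in and out of every layer (C = 64 is an assumption),
# the counts scale to 49 * C^2 and 27 * C^2, so the ratio stays the same.
C = 64
wide_7x7 = conv_weights(7, C, C)
wide_3x3 = 3 * conv_weights(3, C, C)
print(wide_3x3 / wide_7x7)  # about 0.55
```

Under these assumptions the stacked 3x3 design needs roughly 45% fewer weights than one 7x7 layer covering the same receptive field.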

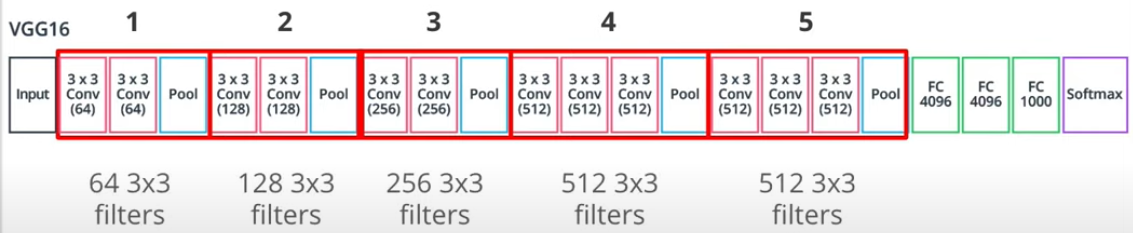

- Structure of VGG16

- 13 Convolution Layers + 3 Fully connected Layers

- 3x3 convolution filters

- Stride 1 and padding 1 for every convolution

- 2x2 max pooling (stride 2)

- ReLU

- Convolution block of VGG16

Each block is a stack of convolutional layers followed by a pooling layer, and VGG16 has 5 such blocks. Every convolutional layer uses the same 3x3 filter size. The number of filters doubles from one block to the next (64, 128, 256, 512) until it reaches 512, and the last block stays at 512.
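The block structure can be traced with a small sketch. The per-block layer counts and filter counts below follow the standard VGG16 configuration (2, 2, 3, 3, 3 convolutions with 64, 128, 256, 512, 512 filters), and the 224x224 input size is the usual ImageNet setting; both are assumptions not spelled out in the text above.

```python
# (number of 3x3 conv layers, number of filters) for each of the 5 blocks.
# Assumed from the standard VGG16 configuration.
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def trace_blocks(input_size=224, blocks=VGG16_BLOCKS):
    """Return (filters, spatial size) at the output of each block."""
    size = input_size
    shapes = []
    for num_convs, filters in blocks:
        for _ in range(num_convs):
            # 3x3 conv, stride 1, padding 1: spatial size is unchanged.
            size = (size + 2 * 1 - 3) // 1 + 1
        # 2x2 max pooling, stride 2: spatial size is halved.
        size = (size - 2) // 2 + 1
        shapes.append((filters, size))
    return shapes


num_conv_layers = sum(num_convs for num_convs, _ in VGG16_BLOCKS)
print(num_conv_layers)  # 13

for filters, size in trace_blocks():
    print(f"{filters} channels, {size}x{size}")
```

With a 224x224 input, the spatial size goes 112, 56, 28, 14, 7 across the five blocks, and the 13 convolution layers plus 3 fully connected layers give the 16 in the name.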

- Receptive Field of VGG16
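As a rough illustration, the receptive field of one unit after the last pooling layer can be computed layer by layer. The sketch assumes the standard VGG16 layout (2, 2, 3, 3, 3 convolutions per block, each block ending in 2x2 max pooling with stride 2); the helper names are hypothetical.

```python
# Number of 3x3 conv layers in each block (assumed standard VGG16 layout).
CONVS_PER_BLOCK = [2, 2, 3, 3, 3]


def vgg16_layers(convs_per_block=CONVS_PER_BLOCK):
    """List of (kernel, stride) for every conv and pooling layer, in order."""
    layers = []
    for num_convs in convs_per_block:
        layers += [(3, 1)] * num_convs  # 3x3 conv, stride 1
        layers.append((2, 2))           # 2x2 max pool, stride 2
    return layers


def receptive_field(layers):
    """Receptive field (in input pixels) of one unit after the last layer."""
    rf, jump = 1, 1  # jump = distance in input pixels between adjacent units
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


print(receptive_field([(3, 1)] * 3))    # 7, same as one 7x7 filter
print(receptive_field(vgg16_layers()))  # 212
```

Under these assumptions, each unit after the fifth pooling layer sees a 212x212 region of the input, even though every individual filter is only 3x3.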
