- Regression

Regression predicts a continuous value, such as weight, age, or speed.

- Linear Regression

Linear regression models a linear relationship between a dependent variable and one or more predictors.

x → y
x: affects y; called the predictor or independent variable.
y: depends on x; called the response or dependent variable.

1. Simple Linear Regression

Assumes a single predictor x.

y = Wx + b
W: weight (slope, gradient)
b: bias (intercept)
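A minimal sketch of the simple linear regression formula in Python. The function name `predict_simple` and the example values of W and b are hypothetical, chosen only to illustrate y = Wx + b.

```python
def predict_simple(x: float, W: float, b: float) -> float:
    """Simple linear regression: y = W*x + b."""
    # W scales the single predictor x (the slope); b shifts the line (the intercept).
    return W * x + b


# Hypothetical parameters: slope W = 2.0, intercept b = 1.0
print(predict_simple(3.0, W=2.0, b=1.0))  # 7.0
```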

2. Multiple Linear Regression

Assumes more than one predictor: x1, x2, ..., xn.

y = W1x1 + W2x2 + ... + Wnxn + b

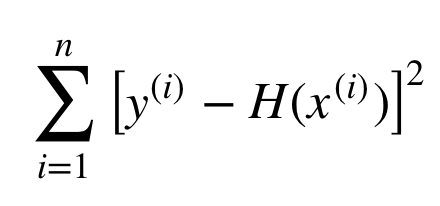
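The multiple-predictor formula is a weighted sum of the inputs plus the bias, one weight per predictor. The sketch below assumes the predictors and weights are passed as equal-length lists; the name `predict_multiple` and the sample numbers are hypothetical.

```python
def predict_multiple(xs: list[float], Ws: list[float], b: float) -> float:
    """Multiple linear regression: y = W1*x1 + W2*x2 + ... + Wn*xn + b."""
    if len(xs) != len(Ws):
        raise ValueError("need exactly one weight per predictor")
    # Weighted sum of all predictors, then add the bias.
    return sum(W * x for W, x in zip(Ws, xs)) + b


# Hypothetical example with n = 3 predictors
print(predict_multiple([1.0, 2.0, 3.0], [0.5, -1.0, 2.0], b=4.0))  # 8.5
```

With a single predictor this reduces to the simple case, y = Wx + b.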

3. Cost function (loss function, objective function)

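Error = actual value - predicted value

The goal is to find the W and b that minimize cost(W, b). A cost of 0 means every prediction matches the actual value exactly, so 0 is the best possible cost.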

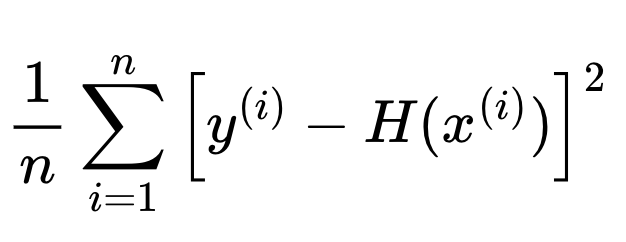
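A small sketch of the per-sample error (actual value minus predicted value). The function name `errors` and the sample data are hypothetical. Note that positive and negative errors can cancel if simply added up, which is one reason a cost such as MSE squares them.

```python
def errors(y_true: list[float], y_pred: list[float]) -> list[float]:
    """Error for each sample: actual value - predicted value."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return [yt - yp for yt, yp in zip(y_true, y_pred)]


# Hypothetical actual values and model predictions
print(errors([3.0, 5.0, 7.0], [2.5, 5.0, 8.0]))  # [0.5, 0.0, -1.0]
```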

4. MSE

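Mean Squared Error: the average of the squared errors over all n samples, a common cost function for linear regression.

MSE = (1/n) × Σ (actual_i - predicted_i)², summed over i = 1, ..., n

Squaring makes every error non-negative, so positive and negative errors cannot cancel, and it penalizes large errors more heavily than small ones.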


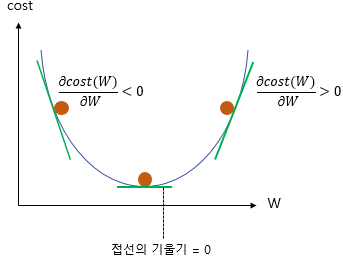
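The MSE formula translates almost directly into code. This is a minimal sketch in plain Python; the function name `mse` and the sample values are hypothetical.

```python
def mse(y_true: list[float], y_pred: list[float]) -> float:
    """Mean Squared Error: (1/n) * sum of (actual - predicted)^2."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("need two non-empty lists of equal length")
    return sum((yt - yp) ** 2 for yt, yp in zip(y_true, y_pred)) / n


# Hypothetical data: errors are 1, 0, -1, 0, so MSE = (1 + 0 + 1 + 0) / 4
print(mse([3.0, 5.0, 7.0, 9.0], [2.0, 5.0, 8.0, 9.0]))  # 0.5
```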

5. Optimizer (Gradient Descent)

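The optimizer is the procedure that searches for the W and b that minimize the cost function. Gradient descent does this iteratively: at each step it computes the gradient of the cost with respect to W and b and moves both a small step in the opposite direction, which lowers the cost.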
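Below is a sketch of batch gradient descent for simple linear regression, assuming MSE as the cost. For cost(W, b) = (1/n) Σ (Wx_i + b - y_i)², the partial derivatives are ∂cost/∂W = (2/n) Σ (Wx_i + b - y_i)·x_i and ∂cost/∂b = (2/n) Σ (Wx_i + b - y_i). The learning rate `lr`, the number of `epochs`, the zero initialization, and the toy data are hypothetical choices, not values from the original post.

```python
def gradient_descent(xs: list[float], ys: list[float],
                     lr: float = 0.05, epochs: int = 2000) -> tuple[float, float]:
    """Fit y = W*x + b by minimizing MSE with plain batch gradient descent."""
    W, b = 0.0, 0.0  # hypothetical starting point
    n = len(xs)
    for _ in range(epochs):
        # Residuals (prediction - actual) for the current W and b
        residuals = [W * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE with respect to W and b
        dW = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        db = 2.0 / n * sum(residuals)
        # Step against the gradient to reduce the cost
        W -= lr * dW
        b -= lr * db
    return W, b


# Toy data generated from y = 2x + 1
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
W, b = gradient_descent(xs, ys)
print(round(W, 3), round(b, 3))  # 2.0 1.0
```

If the learning rate is too large the updates overshoot and the cost diverges; if it is too small, convergence is slow. Here 0.05 was picked by hand for this toy data set.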
