- LM

Language Model: a model that assigns probabilities to word sequences in order to model language; in other words, it is used to find the most natural word sequence.
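To make "assigns probability to a sequence" concrete, here is a minimal sketch. It assumes a hypothetical, hand-written table of conditional probabilities P(word | all previous words); the numbers are invented, not estimated from data. The sequence probability is the product of these conditionals (the chain rule), and the "most natural" candidate is the one with the highest product. `PROBS`, `UNSEEN`, `sequence_prob` and `most_natural` are illustrative names only.

```python
# Hypothetical toy table: P(word | all previous words), keyed by (history, word).
# The numbers are invented for illustration, not estimated from data.
PROBS = {
    ((), "i"): 0.5,
    (("i",), "am"): 0.4,
    (("i", "am"), "happy"): 0.2,
    (("i",), "happy"): 0.01,
    (("i", "happy"), "am"): 0.01,
}

UNSEEN = 1e-6  # assumed fallback for (history, word) pairs missing from the table


def sequence_prob(words):
    """Chain rule: P(w1..wn) = product of P(wi | w1..w(i-1))."""
    p = 1.0
    for i, w in enumerate(words):
        p *= PROBS.get((tuple(words[:i]), w), UNSEEN)
    return p


def most_natural(candidates):
    """Return the candidate sequence with the highest probability."""
    return max(candidates, key=sequence_prob)


candidates = [["i", "happy", "am"], ["i", "am", "happy"]]
print(" ".join(most_natural(candidates)))  # i am happy
```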

- Language Modeling

Predicting an unknown word from the given words (for example, predicting the next word from the words before it).
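A minimal sketch of next-word prediction follows. It assumes a hypothetical next-word distribution for the context "please turn your", with invented probabilities. The prediction is simply the word with the highest probability for that context. `NEXT_WORD_PROBS` and `predict_next` are illustrative names only.

```python
# Hypothetical next-word distributions keyed by the context (previous words).
# The probabilities are invented for illustration.
NEXT_WORD_PROBS = {
    ("please", "turn", "your"): {"homework": 0.6, "attention": 0.3, "car": 0.1},
}


def predict_next(context):
    """Return the most probable next word for the given context."""
    dist = NEXT_WORD_PROBS[tuple(context)]
    return max(dist, key=dist.get)


print(predict_next(["please", "turn", "your"]))  # homework
```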

- Conditional Probability

It is the probability of an event occurring given that another event has already occurred. In probability theory, mutually exclusive events are events that cannot occur simultaneously.

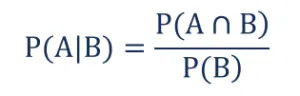

The probability of event A occurring given that event B has already occurred

- P(A|B) – the conditional probability; the probability of event A occurring given that event B has already occurred

- P(A ∩ B) – the joint probability of events A and B; the probability that both events A and B occur

- P(B) – the probability of event B
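The definition P(A|B) = P(A ∩ B) / P(B) can be checked with a small worked example. It assumes one roll of a fair six-sided die, with A = "the roll is even" and B = "the roll is greater than 3"; these events are chosen for illustration. Then P(A ∩ B) = 2/6 and P(B) = 3/6, so P(A|B) = 2/3. The second call uses two mutually exclusive events (odd vs. even): their intersection is empty, so the conditional probability is 0.

```python
from fractions import Fraction

# Sample space of one fair six-sided die; every outcome is equally likely.
OMEGA = {1, 2, 3, 4, 5, 6}
A = {2, 4, 6}  # event A: the roll is even
B = {4, 5, 6}  # event B: the roll is greater than 3


def prob(event):
    """P(event) = favourable outcomes / all outcomes."""
    return Fraction(len(event & OMEGA), len(OMEGA))


def cond_prob(a, b):
    """P(A|B) = P(A ∩ B) / P(B); raises ZeroDivisionError when P(B) = 0."""
    return prob(a & b) / prob(b)


print(cond_prob(A, B))          # 2/3
print(cond_prob({1, 3, 5}, A))  # 0  (odd and even are mutually exclusive)
```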

Pr(A|B)

- B : the event assumed to have occurred

- A : the event whose conditional probability we compute, assuming B has occurred

P(w5 | w1, w2, w3, w4): the probability that w5 occurs, assuming w1 through w4 have occurred

- P : Probability

- w : word
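Applying the conditional-probability definition with A = w5 and B = "w1, w2, w3, w4 occurred" gives the word-level form below. Expanding the joint probability of the whole sequence step by step then gives the chain rule, which is how a language model scores an entire sentence:

$$
P(w_5 \mid w_1, w_2, w_3, w_4) = \frac{P(w_1, w_2, w_3, w_4, w_5)}{P(w_1, w_2, w_3, w_4)}
$$

$$
P(w_1, w_2, w_3, w_4, w_5) = P(w_1)\,P(w_2 \mid w_1)\,P(w_3 \mid w_1, w_2)\,P(w_4 \mid w_1, w_2, w_3)\,P(w_5 \mid w_1, w_2, w_3, w_4)
$$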

- SLM

Statistical Language Model: a language model that estimates these conditional probabilities from word counts in a corpus.
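A count-based statistical LM typically estimates P(word | history) as a relative frequency: how often the history is followed by that word, divided by how often the history is followed by any word. The sketch below does this over a tiny made-up corpus split on whitespace. `CORPUS` and `count_prob` are illustrative names only. The last call shows the usual weakness: a history that never appears in the corpus gives no usable estimate, and long histories rarely appear. This is one motivation for the n-gram approximation.

```python
from fractions import Fraction

# Tiny made-up corpus; a real statistical LM counts over a large corpus.
CORPUS = [
    "please turn your homework in",
    "please turn your phone off",
    "please turn your homework over",
]


def count_prob(history, word, sentences=CORPUS):
    """Estimate P(word | history) = count(history + word) / count(history + any word)."""
    hist = tuple(history.split())
    n = len(hist)
    hist_count = 0  # times the history is followed by some word
    next_count = 0  # times the history is followed by `word`
    for sentence in sentences:
        toks = sentence.split()
        for i in range(len(toks) - n):
            if tuple(toks[i:i + n]) == hist:
                hist_count += 1
                if toks[i + n] == word:
                    next_count += 1
    # A history never seen in the corpus gives no estimate; return 0 here.
    return Fraction(next_count, hist_count) if hist_count else Fraction(0)


print(count_prob("please turn your", "homework"))  # 2/3
print(count_prob("please turn my", "homework"))    # 0 (history never seen)
```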

- n-gram

It is a sequence of n words, for example,

Please turn your homework ...

a 2-gram (which we’ll call bigram) is a two-word sequence of words like “please turn”, “turn your”, or “your homework”, and a 3-gram (a trigram) is a three-word sequence of words like “please turn your”, or “turn your homework”. We’ll see how to use n-gram models to estimate the probability of the last word of an n-gram given the previous words, and also to assign probabilities to entire sequences.
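The sketch below first extracts the bigrams and trigrams of "please turn your homework" listed above. It then builds a bigram model over a tiny made-up corpus. Under the bigram (first-order Markov) approximation, P(w_i | w_1..w_{i-1}) is replaced by P(w_i | w_{i-1}), and a sentence's probability is the product of these bigram estimates. The "<s>" start-of-sentence marker and the names `ngrams`, `bigram_prob`, and `sentence_prob` are assumptions made for this illustration.

```python
from collections import Counter
from fractions import Fraction


def ngrams(tokens, n):
    """Return every contiguous run of n tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


tokens = "please turn your homework".split()
print(ngrams(tokens, 2))  # [('please', 'turn'), ('turn', 'your'), ('your', 'homework')]
print(ngrams(tokens, 3))  # [('please', 'turn', 'your'), ('turn', 'your', 'homework')]

# Tiny made-up corpus; "<s>" is an assumed start-of-sentence marker.
CORPUS = [
    "please turn your homework in",
    "please turn your phone off",
    "please turn your homework over",
]

bigram_counts = Counter()
history_counts = Counter()  # counts a word only when another word follows it
for sentence in CORPUS:
    for prev, word in ngrams(["<s>"] + sentence.split(), 2):
        bigram_counts[(prev, word)] += 1
        history_counts[prev] += 1


def bigram_prob(prev, word):
    """Estimate P(word | prev) = count(prev word) / count(prev followed by any word)."""
    if history_counts[prev] == 0:
        return Fraction(0)
    return Fraction(bigram_counts[(prev, word)], history_counts[prev])


def sentence_prob(sentence):
    """Bigram approximation: multiply P(w_i | w_{i-1}) over the sentence."""
    p = Fraction(1)
    for prev, word in ngrams(["<s>"] + sentence.split(), 2):
        p *= bigram_prob(prev, word)
    return p


print(bigram_prob("your", "homework"))            # 2/3
print(sentence_prob("please turn your homework"))  # 2/3
```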
