- Perceptron

The perceptron is a linear classifier: an algorithm for supervised learning of binary classifiers.

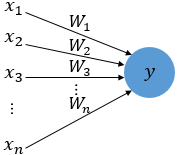

Input (multiple x) --> Output (one y)

x : input

W : Weight

y : output

Each x has its own weight W. The larger the weight, the more that input contributes to the output.

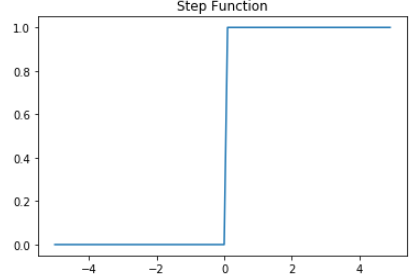

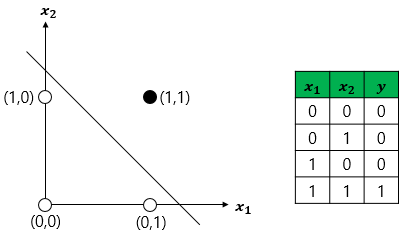
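As a minimal sketch of the weighted sum described above, the snippet below multiplies each input by its weight and adds the results. The function name `weighted_sum` and the sample values are assumptions made for illustration; they do not come from the figures.

```python
def weighted_sum(xs, ws):
    """Return the sum of each input multiplied by its own weight."""
    return sum(x * w for x, w in zip(xs, ws))


# Hypothetical inputs and weights, chosen only for illustration.
inputs = [1.0, 0.0, 1.0]
weights = [0.5, 0.25, 2.0]

# 1.0 * 0.5 + 0.0 * 0.25 + 1.0 * 2.0
total = weighted_sum(inputs, weights)
print(total)
```

With these values the third input has the largest weight, so it contributes the most to the total.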

- Step function

∑ W * x >= threshold (θ) --> output (y) : 1

∑ W * x < threshold (θ) --> output (y) : 0
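The two rules above can be sketched in a few lines of Python. The names `step` and `perceptron_output`, the weights, and the threshold value 0.75 are assumptions picked for this example only.

```python
def step(total, theta):
    """Return 1 when the weighted sum reaches the threshold, otherwise 0."""
    return 1 if total >= theta else 0


def perceptron_output(xs, ws, theta):
    """Compute the weighted sum of the inputs and pass it through the step function."""
    total = sum(x * w for x, w in zip(xs, ws))
    return step(total, theta)


# Hypothetical weights and threshold, chosen only for illustration.
ws = [0.5, 0.5]
theta = 0.75

outputs = [perceptron_output([x1, x2], ws, theta) for x1 in (0, 1) for x2 in (0, 1)]
print(outputs)
```

Only the input (1, 1) produces a weighted sum (1.0) that reaches the threshold, so it is the only case that outputs 1.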

The threshold (θ) can be moved to the other side of the inequality and expressed as a bias b = -θ, as follows:

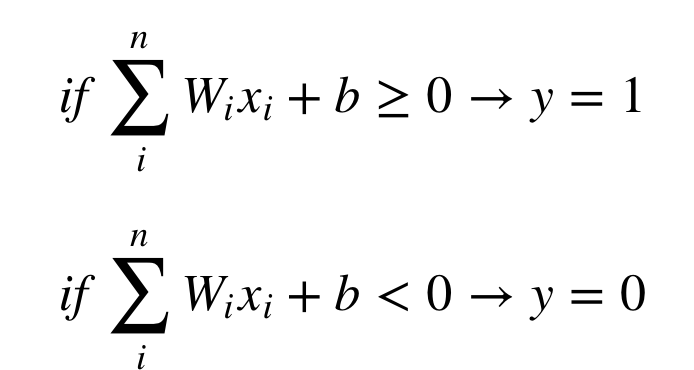

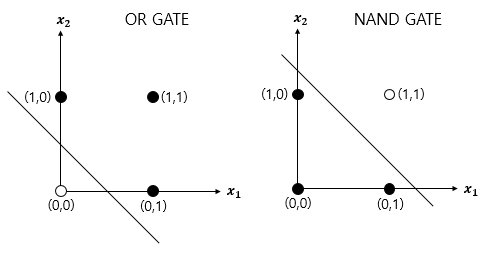
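A small check, assuming the bias is defined as b = -θ, suggests that the threshold form and the bias form give the same output. The function names and the sample values here are hypothetical.

```python
def fires_with_threshold(xs, ws, theta):
    """Threshold form: compare the weighted sum against theta."""
    total = sum(x * w for x, w in zip(xs, ws))
    return 1 if total >= theta else 0


def fires_with_bias(xs, ws, b):
    """Bias form: add the bias to the weighted sum and compare against 0."""
    total = sum(x * w for x, w in zip(xs, ws)) + b
    return 1 if total >= 0 else 0


# Hypothetical values for illustration; b is assumed to equal -theta.
ws = [0.5, 0.5]
theta = 0.75
b = -theta

pairs = [(0, 0), (0, 1), (1, 0), (1, 1)]
same = all(
    fires_with_threshold(p, ws, theta) == fires_with_bias(p, ws, b) for p in pairs
)
print(same)
```

Writing the threshold as a bias keeps the comparison fixed at 0, so the bias can be treated like one more adjustable parameter next to the weights.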

- Single-Layer Perceptron

It can learn only linearly separable patterns.
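One way to see this limitation is to train a single perceptron with the classic perceptron learning rule, which these notes do not spell out. The sketch below is an illustration under stated assumptions: zero initial weights, a learning rate of 1, a cap of 50 epochs, and the hypothetical names `predict` and `train_perceptron`. XOR is used here as a standard example of a pattern that is not linearly separable.

```python
def predict(x, w, b):
    """Step activation: 1 if the weighted sum plus bias is positive, else 0."""
    total = sum(xi * wi for xi, wi in zip(x, w)) + b
    return 1 if total > 0 else 0


def train_perceptron(samples, lr=1, max_epochs=50):
    """Return (weights, bias, converged) after applying the perceptron rule."""
    w = [0, 0]
    b = 0
    for _ in range(max_epochs):
        errors = 0
        for x, target in samples:
            error = target - predict(x, w, b)
            if error != 0:
                # Nudge the weights and bias toward the correct answer.
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error
                errors += 1
        if errors == 0:
            # A full pass with no mistakes: the data has been separated.
            return w, b, True
    return w, b, False


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w, b, and_converged = train_perceptron(AND_DATA)
and_predictions = [predict(x, w, b) for x, _ in AND_DATA]
_, _, xor_converged = train_perceptron(XOR_DATA)
print(and_converged, and_predictions, xor_converged)
```

Training on the AND data reaches an error-free pass, while training on the XOR data never does, because no single straight line separates the XOR outputs.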

- AND gate

    def AND_gate(x1, x2):
        # Weights and bias chosen so that only (1, 1) gives a positive sum.
        w1 = 0.5
        w2 = 0.5
        b = -0.7
        result = x1 * w1 + x2 * w2 + b
        if result <= 0:
            return 0
        else:
            return 1

    AND_gate(0, 0), AND_gate(0, 1), AND_gate(1, 0), AND_gate(1, 1)
    >>>
    (0, 0, 0, 1)

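- NAND gate

    def NAND_gate(x1, x2):
        # Same structure as the AND gate, with the signs of the weights and bias flipped.
        w1 = -0.5
        w2 = -0.5
        b = 0.7
        result = x1 * w1 + x2 * w2 + b
        if result <= 0:
            return 0
        else:
            return 1

    NAND_gate(0, 0), NAND_gate(0, 1), NAND_gate(1, 0), NAND_gate(1, 1)
    >>>
    (1, 1, 1, 0)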
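The two gates share one structure and differ only in their parameters. As a sketch, a single helper (the name `gate` is hypothetical) can reproduce both by passing in the weights and bias used above.

```python
def gate(x1, x2, w1, w2, b):
    """Single perceptron with two inputs: output 1 only when the sum is positive."""
    result = x1 * w1 + x2 * w2 + b
    return 0 if result <= 0 else 1


INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]

# Parameters taken from the AND and NAND gates in these notes.
and_out = tuple(gate(x1, x2, 0.5, 0.5, -0.7) for x1, x2 in INPUTS)
nand_out = tuple(gate(x1, x2, -0.5, -0.5, 0.7) for x1, x2 in INPUTS)
print(and_out, nand_out)
```

Flipping the sign of every parameter turns the AND gate into its negation, which is why the NAND outputs are the opposite of the AND outputs for every input.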

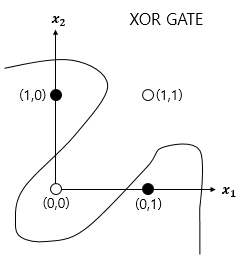

- Multi-Layer Perceptron

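It can learn patterns that are not linearly separable, that is, classes that can only be divided by a curved boundary. This lets it distinguish classes that a single-layer perceptron cannot, such as the outputs of an XOR gate.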
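A hand-wired sketch of this idea stacks single perceptrons into two layers to build an XOR gate, which no single perceptron can represent. The AND and NAND parameters follow the gates in these notes; the OR gate parameters (0.5, 0.5, -0.2) and the helper name `perceptron` are assumptions added for this example. The weights are set by hand here, not learned.

```python
def perceptron(x1, x2, w1, w2, b):
    """Single perceptron: output 1 only when the weighted sum plus bias is positive."""
    return 0 if x1 * w1 + x2 * w2 + b <= 0 else 1


def AND_gate(x1, x2):
    return perceptron(x1, x2, 0.5, 0.5, -0.7)


def NAND_gate(x1, x2):
    return perceptron(x1, x2, -0.5, -0.5, 0.7)


def OR_gate(x1, x2):
    # Assumed parameters: any single active input pushes the sum above zero.
    return perceptron(x1, x2, 0.5, 0.5, -0.2)


def XOR_gate(x1, x2):
    # First layer: two perceptrons look at the raw inputs.
    s1 = NAND_gate(x1, x2)
    s2 = OR_gate(x1, x2)
    # Second layer: one perceptron combines the first-layer outputs.
    return AND_gate(s1, s2)


xor_out = (XOR_gate(0, 0), XOR_gate(0, 1), XOR_gate(1, 0), XOR_gate(1, 1))
print(xor_out)
```

The first layer plays the role of a hidden layer: it remaps the inputs so that the final AND gate sees a problem that is linearly separable.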

- DNN

A Deep Neural Network (DNN) is usually defined as a neural network with two or more hidden layers.
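To make the layer structure concrete, here is a minimal forward-pass sketch with three hidden layers of step-activated perceptrons. All weights, biases, and names (`dense_layer`, `forward`, `LAYERS`) are hypothetical values picked for illustration, and practical deep networks typically use other activation functions and learn their weights from data.

```python
def step(value):
    """Step activation: 1 for a positive value, otherwise 0."""
    return 1 if value > 0 else 0


def dense_layer(inputs, weights, biases):
    """One fully connected layer: each row of weights belongs to one perceptron."""
    return [
        step(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the inputs through every layer in order and return the final outputs."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical parameters: three hidden layers followed by one output layer.
LAYERS = [
    ([[0.5, 0.5], [-0.5, -0.5]], [-0.7, 0.7]),  # hidden layer 1
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),   # hidden layer 2
    ([[1.0, 1.0], [1.0, -1.0]], [-0.5, 0.0]),   # hidden layer 3
    ([[1.0, 1.0]], [-0.5]),                     # output layer
]

hidden_layer_count = len(LAYERS) - 1
output = forward([1, 1], LAYERS)
print(hidden_layer_count, output)
```

Each layer only sees the outputs of the layer before it, so adding hidden layers means adding more entries to the list without changing the forward-pass code.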