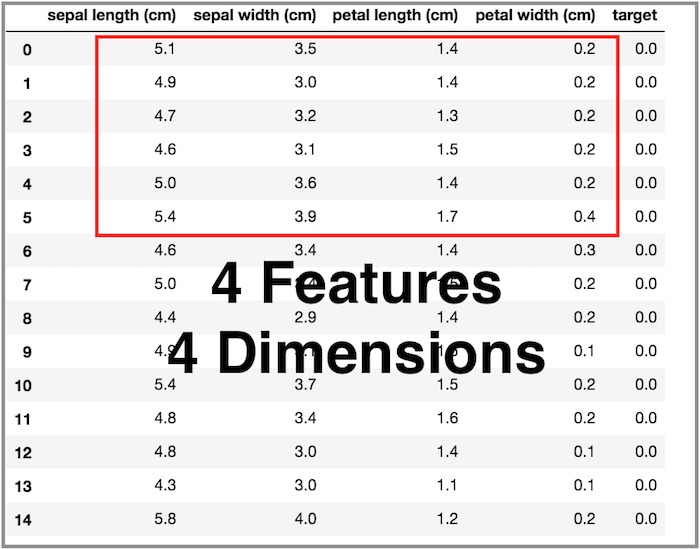

- Feature(Independent variable 'x')

Each value in a sample, such as 5.1, 3.5, 1.4, and 0.2, is a feature.

The number of features here is 4.

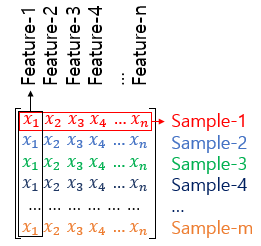

- Sample (Instance, Example, Case, Record, Data Point, Row)

Case 1.

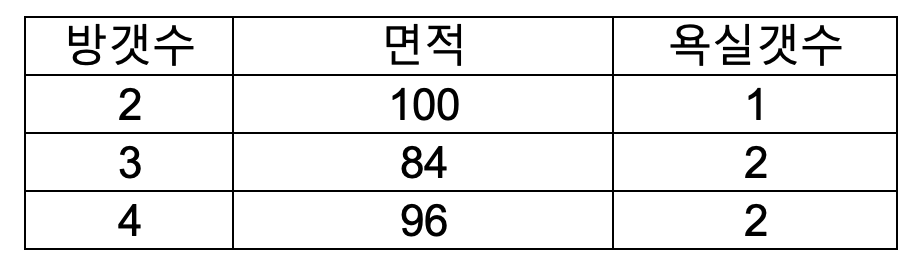

\( \text{1st sample} = \begin{bmatrix} 2 \\ 100 \\ 1 \end{bmatrix} = (2,\,100,\,1) \)

Case 2.

- The number of independent-variable values 'x' is the number of samples times the number of features: 5 × 3 = 15. They form the matrix \( X = \begin{pmatrix} x_{11} & x_{12} & x_{13} \\ x_{21} & x_{22} & x_{23} \\ x_{31} & x_{32} & x_{33} \\ x_{41} & x_{42} & x_{43} \\ x_{51} & x_{52} & x_{53} \end{pmatrix} \)

- Then \( H(X) = XW + B \) can be written out as \(\begin{bmatrix} x_{11} & x_{12} & x_{13} \\ x_{21} & x_{22} & x_{23} \\ x_{31} & x_{32} & x_{33} \\ x_{41} & x_{42} & x_{43} \\ x_{51} & x_{52} & x_{53} \end{bmatrix} \begin{bmatrix} w_1 \\ w_2 \\ w_3 \end{bmatrix} + \begin{bmatrix} b \\ b \\ b \\ b \\ b \end{bmatrix} = \begin{bmatrix} x_{11}w_1 + x_{12}w_2 + x_{13}w_3 + b \\ x_{21}w_1 + x_{22}w_2 + x_{23}w_3 + b \\ x_{31}w_1 + x_{32}w_2 + x_{33}w_3 + b \\ x_{41}w_1 + x_{42}w_2 + x_{43}w_3 + b \\ x_{51}w_1 + x_{52}w_2 + x_{53}w_3 + b \end{bmatrix} \)

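- The size of 'W' follows from the sizes of X and Y: the number of rows of W must match the number of columns of X, and the number of columns of W must match the number of columns of Y. X is 5 × 3 and the output is 5 × 1, therefore W is 3 × 1 in the case above.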
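
The matrix form above can be checked with a minimal sketch in plain Python. The first row of `X` reuses the sample (2, 100, 1) from Case 1; every other number, including the weights and the bias, is made up for illustration. The helper names `matmul` and `hypothesis` are hypothetical, not part of any library.

```python
# Hypothetical data: 5 samples x 3 features.
# Only the first row (2, 100, 1) appears in the text; the rest is invented.
X = [
    [2.0, 100.0, 1.0],
    [3.0, 80.0, 0.0],
    [1.0, 120.0, 1.0],
    [4.0, 90.0, 0.0],
    [5.0, 110.0, 1.0],
]

# Assumed weights (3 x 1) and bias; chosen arbitrarily for the example.
W = [[1.0], [0.5], [2.0]]
b = 1.0


def matmul(A, B):
    """Multiply an (n x k) matrix by a (k x m) matrix given as nested lists."""
    # The product is only defined when columns of A equal rows of B.
    assert len(A[0]) == len(B), "columns of A must equal rows of B"
    return [
        [sum(a * v for a, v in zip(row, col)) for col in zip(*B)]
        for row in A
    ]


def hypothesis(X, W, b):
    """Compute H(X) = XW + B, where B repeats the scalar b for every sample."""
    XW = matmul(X, W)
    return [[value + b for value in row] for row in XW]


H = hypothesis(X, W, b)

# Shape check: (5 x 3) times (3 x 1) gives (5 x 1), one prediction per sample.
print(len(X), len(X[0]))  # 5 3
print(len(W), len(W[0]))  # 3 1
print(len(H), len(H[0]))  # 5 1

# First sample: 2*1.0 + 100*0.5 + 1*2.0 + 1.0
print(H[0][0])  # 55.0
```

If `W` were given any shape other than 3 × 1 rows, for example two rows, the assertion inside `matmul` would fail, which is the size constraint stated in the last bullet.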
