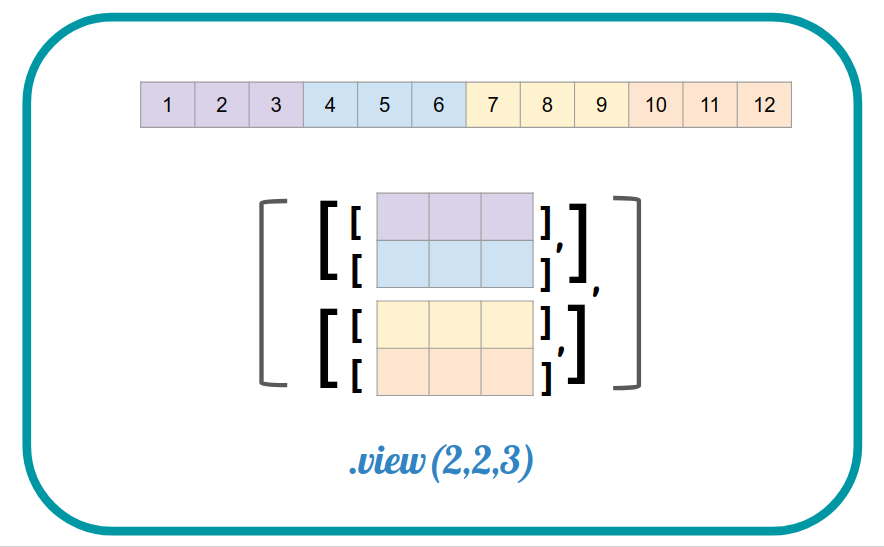

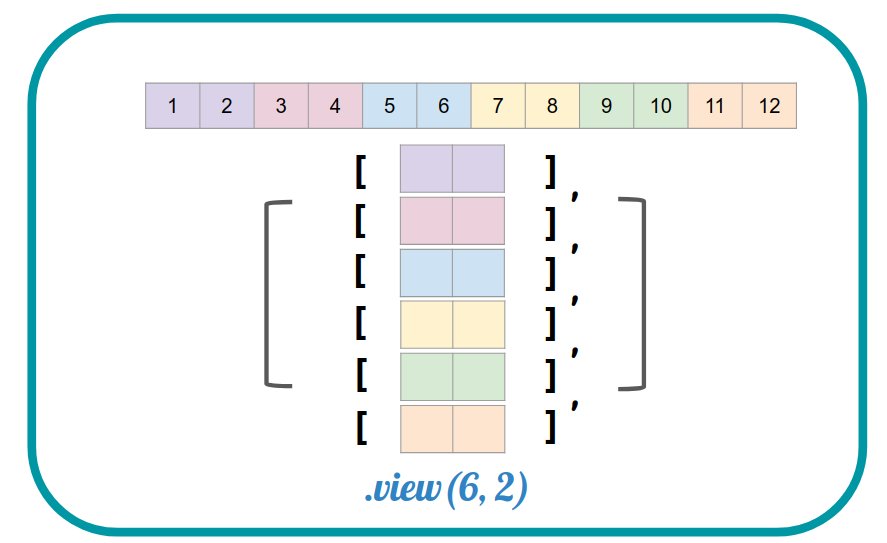

- view

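It returns a new tensor that shares the data of the original tensor but reads it with a different shape. It plays the same role as reshape in NumPy, except that it never copies the data.

-1 : The size of this dimension is left unspecified, and PyTorch infers it from the total number of elements and the other dimensions.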
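As a minimal sketch of how the -1 placeholder is resolved (the tensor size of 24 and the variable names are chosen only for illustration), the inferred dimension is the total element count divided by the product of the other dimensions:

```python
import torch

t = torch.arange(24)        # 24 elements in total

a = t.view(-1, 6)           # 24 / 6 = 4 rows are inferred
b = t.view(2, -1, 4)        # 24 / (2 * 4) = 3 is inferred
print(a.shape)              # torch.Size([4, 6])
print(b.shape)              # torch.Size([2, 3, 4])

# The element count must divide evenly, otherwise view raises an error.
try:
    t.view(-1, 5)           # 24 is not divisible by 5
except RuntimeError as err:
    print("view failed:", err)
```

Only one dimension can be given as -1, since otherwise the missing sizes would be ambiguous.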

t = torch.FloatTensor([[[1.,2.],[3.,4.]],[[5.,6.],[7.,8.]]])

t

>>>

tensor([[[1., 2.],

[3., 4.]],

[[5., 6.],

[7., 8.]]])

t.view([-1,2])

>>>

tensor([[1., 2.],

[3., 4.],

[5., 6.],

[7., 8.]])

a=torch.randn(1,2,3,4)

a

>>>

tensor([[[[ 0.3861, -0.2761, -2.0326, 0.1494],

[ 0.4762, -0.3741, 1.5705, -0.7584],

[ 0.4935, -0.9561, -0.5429, 2.1391]],

[[ 0.1052, -0.4533, -1.4188, 1.7282],

[-0.1203, -1.3732, 0.4870, -1.5323],

[-0.6002, -0.1653, 2.3912, -2.3870]]]])

a.view(1,3,2,4)

>>>

tensor([[[[ 0.3861, -0.2761, -2.0326, 0.1494],

[ 0.4762, -0.3741, 1.5705, -0.7584]],

[[ 0.4935, -0.9561, -0.5429, 2.1391],

[ 0.1052, -0.4533, -1.4188, 1.7282]],

[[-0.1203, -1.3732, 0.4870, -1.5323],

[-0.6002, -0.1653, 2.3912, -2.3870]]]])

Changing a value in a view tensor also changes the value in the original tensor, and vice versa, because the view and its original tensor share the same block of memory.
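One way to check the memory sharing directly is to compare the address of the first element with data_ptr(). This is only a small sketch; the variable names are illustrative:

```python
import torch

x = torch.arange(1, 13)
v = x.view(3, 4)

# Both tensors start at the same address in memory.
print(v.data_ptr() == x.data_ptr())   # True

v[0, 0] = 100          # write through the view
x[11] = -1             # write through the original
print(x[0].item())     # 100
print(v[2, 3].item())  # -1
```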

# When data is contiguous

x = torch.arange(1,13)

x

>> tensor([ 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12])

# Reshape returns a view with the new dimension

y = x.reshape(4,3)

y

>>

tensor([[ 1, 2, 3],

[ 4, 5, 6],

[ 7, 8, 9],

[10, 11, 12]])

# How do we know it's a view? Changing an element of the new tensor y also changes x, and vice versa

y[0,0] = 100

y

>>

tensor([[100, 2, 3],

[ 4, 5, 6],

[ 7, 8, 9],

[ 10, 11, 12]])

print(x)

>>

tensor([100, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12])

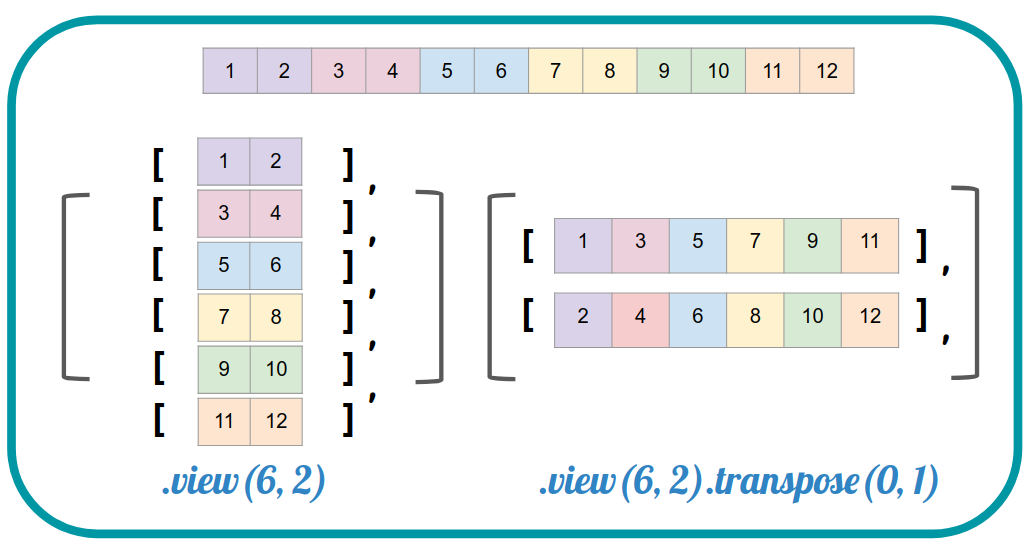

The data here follows a contiguous order.

view does not work on data stored in a non-contiguous order.

# After transpose(), the data is non-contiguous

x = torch.arange(1,13).view(6,2).transpose(0,1)

x

>>

tensor([[ 1, 3, 5, 7, 9, 11],

[ 2, 4, 6, 8, 10, 12]])

# Try to use view on the non-contiguous data

y = x.view(4,3)

y

>>

-------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

----> 1 y = x.view(4,3)

2 y

RuntimeError: view size is not compatible with input tensor's size and stride (at least one dimension spans across two contiguous subspaces). Use .reshape(...) instead.
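The error can be understood by looking at the strides. The sketch below (variable names are illustrative) prints the strides before and after transpose() and shows two common ways around the error, both of which end up copying the data:

```python
import torch

base = torch.arange(1, 13).view(6, 2)
x = base.transpose(0, 1)          # shape (2, 6), same memory as base

print(base.stride())              # (2, 1): row-major, contiguous
print(x.stride())                 # (1, 2): strides swapped
print(x.is_contiguous())          # False

# Two ways to get a (4, 3) tensor despite the non-contiguous layout:
y1 = x.contiguous().view(4, 3)    # copy into contiguous memory, then view
y2 = x.reshape(4, 3)              # reshape copies when a view is impossible
print(torch.equal(y1, y2))        # True
```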

- reshape

reshape returns a view when the data is contiguous.

# When data is contiguous

x = torch.arange(1,13)

x

>> tensor([ 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12])

# Reshape returns a view with the new dimension

y = x.reshape(4,3)

y

>>

tensor([[ 1, 2, 3],

[ 4, 5, 6],

[ 7, 8, 9],

[10, 11, 12]])

# How do we know it's a view? Changing an element of the new tensor y also changes x, and vice versa

y[0,0] = 100

y

>>

tensor([[100, 2, 3],

[ 4, 5, 6],

[ 7, 8, 9],

[ 10, 11, 12]])

print(x)

>>

tensor([100, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12])

reshape also works when the data is non-contiguous, but in that case it returns a copy, so a change in the new tensor does not affect the value in the original tensor.

# After transpose(), the data is non-contiguous

x = torch.arange(1,13).view(6,2).transpose(0,1)

x

>>

tensor([[ 1, 3, 5, 7, 9, 11],

[ 2, 4, 6, 8, 10, 12]])

# reshape() works fine on non-contiguous data

y = x.reshape(4,3)

y

>>

tensor([[ 1, 3, 5],

[ 7, 9, 11],

[ 2, 4, 6],

[ 8, 10, 12]])

# Change an element in y

y[0,0] = 100

y

>>

tensor([[100, 3, 5],

[ 7, 9, 11],

[ 2, 4, 6],

[ 8, 10, 12]])

# Check the original tensor, and nothing was changed

x

>>

tensor([[ 1, 3, 5, 7, 9, 11],

[ 2, 4, 6, 8, 10, 12]])
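Whether reshape returned a view or a copy can also be checked without modifying any values. The following sketch uses a hypothetical helper, shares_memory, which is not part of PyTorch and simply compares data_ptr():

```python
import torch

def shares_memory(a, b):
    """Hypothetical helper: True if both tensors start at the same address."""
    return a.data_ptr() == b.data_ptr()

contig = torch.arange(1, 13)
non_contig = torch.arange(1, 13).view(6, 2).transpose(0, 1)

print(shares_memory(contig, contig.reshape(4, 3)))          # True: a view
print(shares_memory(non_contig, non_contig.reshape(4, 3)))  # False: a copy
```

Because the result depends on the memory layout, code that relies on sharing (or on not sharing) may be safer using view or clone() explicitly.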

- transpose

It returns a non-contiguous view by swapping the strides of the two given axes; no data is moved.

The result no longer follows a contiguous order: walking along the innermost dimension no longer visits the stored elements one by one before jumping to the next dimension.

It is a non-contiguous ‘View’. It only changes the strides used to read the original data, so any data modification on the original tensor affects the view, and vice versa.

# Change the value in a transpose tensor y

x = torch.arange(0,12).view(6,2)

y = x.transpose(0,1)

y[0,0] = 100

y

>>

tensor([[100, 2, 4, 6, 8, 10],

[ 1, 3, 5, 7, 9, 11]])

# Check the original tensor x

x

>>

tensor([[100, 1],

[ 2, 3],

[ 4, 5],

[ 6, 7],

[ 8, 9],

[ 10, 11]])
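To make the stride swap concrete, the sketch below prints the shape and strides of both tensors and recomputes by hand where one element of the transposed tensor sits in the shared buffer. The indices i and j are arbitrary illustrative choices:

```python
import torch

x = torch.arange(0, 12).view(6, 2)
y = x.transpose(0, 1)

print(x.shape, x.stride())   # torch.Size([6, 2]) (2, 1)
print(y.shape, y.stride())   # torch.Size([2, 6]) (1, 2)

# Element [i, j] sits at offset i * stride[0] + j * stride[1] in the buffer.
flat = x.reshape(-1)         # x is contiguous, so this is the buffer order
i, j = 1, 3
offset = i * y.stride(0) + j * y.stride(1)
print(offset)                                  # 7
print(y[i, j].item() == flat[offset].item())   # True
```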

- contiguous()

Converts a non-contiguous tensor (or view) to a contiguous one.

It makes a copy of the original ‘non-contiguous’ tensor and saves it to a new chunk of memory in contiguous order. If the tensor is already contiguous, no copy is made.

x = torch.arange(0,12).view(2,6)

x.is_contiguous()

>> True

y = x.transpose(0,1)

y.is_contiguous()

>> False

z = y.contiguous()

z.is_contiguous()

>> True
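As a rough check that contiguous() really produces an independent copy, the sketch below compares memory addresses and strides, then modifies the copy. The variable names mirror the example above:

```python
import torch

x = torch.arange(0, 12).view(2, 6)
y = x.transpose(0, 1)         # non-contiguous view of x
z = y.contiguous()            # new tensor stored in contiguous order

print(y.data_ptr() == x.data_ptr())   # True: y shares memory with x
print(z.data_ptr() == x.data_ptr())   # False: z has its own memory
print(y.stride(), z.stride())         # (1, 6) (2, 1)

z[0, 0] = 100                 # modify the copy
print(x[0, 0].item())         # 0: the original is untouched

# On an already contiguous tensor, no new memory is allocated.
print(x.contiguous().data_ptr() == x.data_ptr())   # True
```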

Summary
